Oh my God! CURSORs are comin’!

Oh my God! CURSORs are comin’! Are we going to let ’em set foot in this town?

Hell No!!!

We don’t want their kind ’round these parts!

We gotta protect our wives and kin!

So grab yer pitchforks and torches! We ain’t gonna stand for it anymore! Let’s teach ’em a thing or two!

Kill the beasts!

I wanted to open this blog article with the same kind of flavor that I’ve seen in most blog posts regarding cursors. In other words: They are bad… they are evil… they are tools of the Devil! That’s all there is to it. Don’t ever use them under any circumstances or else you will burn in Hell.

Yes, of course a set-based approach is the preferred way to approach a problem… if a set-based approach can be found. Sometimes there are business problems that can only be solved by processing a row at a time, and that’s where cursors come into play. Itzik Ben-Gan gives a very mature argument about this in his T-SQL Programming book from the Inside SQL Server series. But there are still many out there who have such a hatred and/or phobia of cursors that they will trash them in their blogs.

Here’s what they will typically do… A demonstration of why cursors are bad. Okay, here it goes…

Create a table with a million rows (with a primary key clustered index):

use TestDBNow let’s put together some code to use a cursor to go through those million rows one row at a time in clustered key order:

go

if object_ID('TestTable','U') is not null drop table TestTable

go

create table TestTable

(

ID int identity(1,1) primary key

,Column1 varchar(50)

,Column2 varchar(50)

)

go

with

L0(c) as (select 0 from (values (0),(0),(0)) x(c)) /* 3 Rows */

,L1(c) as (select 0 from L0 a,L0 b,L0 c) /* 27 Rows=3x3x3 */

,L2(c) as (select 0 from L1 a,L1 b,L1 c) /* 19683 Rows=27x27x27 */

,L3(c) as (select 0 from L2 a,L2 b) /* 387,420,489 Rows=19683x19683 */

,NN(n) as (select row_number() over (order by (select 0)) from L3)

insert TestTable (Column1,Column2)

select newid(),newid()

from NN

where n<=1000000

declare @ID intAnd now let’s show our superiority by putting together some code to read the rows one at a time in a non-cursor based mode. In fact, we’ll do it in a dumb way by retrieving the next ID in the clustered index using a MIN() aggregate and then, once we have that, we’ll use that ID to lookup the entire row’s columns. Of course, we could get all the row’s columns in a single SQL statement (using TOP instead of a MIN() aggregate), but we’ll do it in this inefficient way just to prove a point:

,@Column1 varchar(50)

,@Column2 varchar(50)

declare c cursor

for select ID,Column1,Column2

from TestTable

order by ID

open c

while 1=1

begin

fetch c into @ID,@Column1,@Column2

if @@fetch_status<>0 break

/* We would do something with the data here */

end

close c

deallocate c

declare @ID intNow how does that pathetic cursor compare to our far superior code?

,@Column1 varchar(50)

,@Column2 varchar(50)

declare @CurrID int

,@NextID int

select @CurrID = -2147483648 /* Initialize to lowest possible ID */

while 1=1

begin

/* Get Next Sequential ID */

select @NextID=(select min(ID)

from TestTable

where ID>@CurrID)

if @NextID is null break

/* Retrieve row */

select @ID=ID

,@Column1=Column1

,@Column2=Column2

from TestTable

where ID=@NextID

/* We would do something with the data here */

select @CurrID=@ID /* Save Current ID */

end

/*

Description of Method CPU Reads Writes Duration

------------------------------------------------------------------------

CURSOR Approach 46120ms 4,011,375 0 52210ms

Non-CURSOR Approach using MIN(ID) 39750ms 6,011,372 0 46021ms <--Winner

*/

Here’s the part where we look down our noses and laugh hysterically and point to the bad performance of the cursor and say, “See? I told you. Cursors really suck!”There… I’ve given you a synopsis of hundreds of blog articles that have been posted over the last umpteen years.

Here’s the part they left out, though. (They didn’t leave it out on purpose… they just didn’t dig deep enough).

Go back to the cursor code and add one single keyword to the cursor declaration… the word STATIC… and run it again:

declare @ID intNow how does that compare to our other tests?

,@Column1 varchar(50)

,@Column2 varchar(50)

declare c cursor static

for select ID,Column1,Column2

from TestTable

order by ID

open c

while 1=1

begin

fetch c into @ID,@Column1,@Column2

if @@fetch_status<>0 break

/* We would do something with the data here */

end

close c

deallocate c

/*

Description of Method CPU Reads Writes Duration

------------------------------------------------------------------------

CURSOR Approach (No Keywords) 46120ms 4,011,375 0 52210ms

CURSOR Approach (STATIC) 23906ms 6,061,368 11,817 25068ms <--Winner

Non-CURSOR Approach using MIN(ID) 39750ms 6,011,372 0 46021ms

*/

Who’s laughing now? Which approach is fastest now? Huh?Okay, let’s be fair. Let’s make our non-cursor based approach a little more intelligent by doing a TOP 1 instead of the inefficient MIN(ID) approach:

declare @ID intNow how does everything measure up?

,@Column1 varchar(50)

,@Column2 varchar(50)

declare @CurrID int

select @CurrID = -2147483648 /* Initialize to lowest possible ID */

while 1=1

begin

select top 1 @ID=ID

,@Column1=Column1

,@Column2=Column2

from TestTable

where ID>@CurrID

order by ID

if @@rowcount=0 break

/* We would do something with the data here */

select @CurrID=@ID /* Save Current ID */

end

/*

Description of Method CPU Reads Writes Duration

------------------------------------------------------------------------

CURSOR Approach (No Keywords) 46120ms 4,011,375 0 52210ms

CURSOR Approach (STATIC) 23906ms 6,061,368 11,817 25068ms <--Winner

Non-CURSOR Approach using MIN(ID) 39750ms 6,011,372 0 46021ms

Non-CURSOR Approach using TOP 1 20286ms 3,011,368 0 27137ms

*/

Which approach is fastest? Huh? Come on, you can say it. It won’t kill you. That’s right. The STATIC cursor approach is the fastest.In order to understand why the STATIC cursor outperformed the other approaches, we have to look at cursors more closely and see how they work under the hood. Cursors are defined by scope (LOCAL or GLOBAL), by direction (FORWARD_ONLY or SCROLL), by type (STATIC, KEYSET, DYNAMIC, and FAST_FORWARD) , and by concurrency (READ_ONLY, SCROLL_LOCKS, and OPTIMISTIC). I only want to concentrate on the latter two: type and concurrency.

Let’s look at the types of cursors first, but for simplicity’s sake, we will look at them only with a READ_ONLY concurrency for now. A little later in this article, we will see how the other concurrency settings change things. The cursors we test out below will also be defined as LOCAL in scope and FORWARD_ONLY in direction in our testing.

STATIC Cursors

A STATIC cursor makes a copy of the data (i.e. the columns we specified in the DECLARE CURSOR command) into a temporary clustered index table in TempDB called CWT_PrimaryKey behind the scenes before it does any FETCHing at all. (I presume that CWT stands for Cursor Work Table). When we do FETCH from the cursor, the data comes from that temporary clustered index and NOT from the original table. You can see this by executing the following code, which just does an OPEN and a single FETCH…

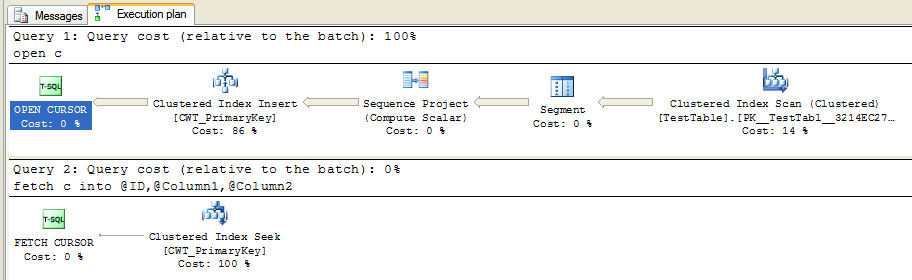

declare @ID int… and now look at the Actual Execution Plans for the OPEN and FETCH:

,@Column1 varchar(50)

,@Column2 varchar(50)

declare c cursor local forward_only static read_only

for select ID,Column1,Column2

from TestTable

order by ID

open c

fetch c into @ID,@Column1,@Column2

close c

deallocate c

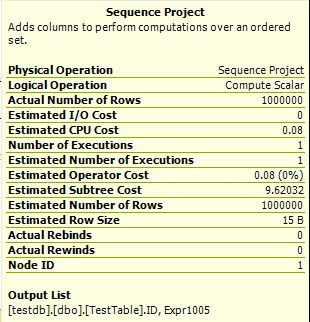

Looking at the properties of the Sequence Projection Operator of the OPEN plan, we can see in the Output List that all of the columns get fed to the Clustered Index Insert Operator:

And in the FETCH plan, we can see that the data we retrieve comes directly from that CWT_PrimaryKey temp table and no longer touches the original table at all.

Notice the Segment Operator and the Sequence Projection Operator in OPEN plan? That indicates a window function… specifically a ROW_NUMBER() calculation (that's the Expr1005 column in the Output List). I can duplicate this same thing in T-SQL like so:

if object_ID('tempdb..#CWT_PrimaryKey','U') is not null drop table #CWT_PrimaryKeyAnd here is the Actual Plan for that… Notice the similarity with the STATIC cursor’s OPEN plan:

create table #CWT_PrimaryKey (SeqNo bigint primary key

,ID int

,Column1 varchar(50)

,Column2 varchar(50))

insert #CWT_PrimaryKey

select SeqNo=row_number() over (order by ID)

,ID=ID

,Column1

,Column2

from TestTable

(If you’re wondering about the TOP operator, it’s a ROWCOUNT Top… it just makes sure that any SET ROWCOUNT setting is honored when INSERTing rows into the temp table.)

In fact, we can write T-SQL code to process our million-row TestTable, emulating the behavior of a STATIC cursor:

if object_ID('tempdb..#CWT_PrimaryKey','U') is not null drop table #CWT_PrimaryKeyHow does this compare with all the other methods so far in processing the million-row table?:

create table #CWT_PrimaryKey (SeqNo bigint primary key

,ID int

,Column1 varchar(50)

,Column2 varchar(50))

insert #CWT_PrimaryKey

select SeqNo=row_number() over (order by ID)

,ID=ID

,Column1

,Column2

from TestTable

declare @SeqToFetch int

set @SeqToFetch=0

while 1=1

begin

set @SeqToFetch=@SeqToFetch+1

select @ID=ID

,@Column1=Column1

,@Column2=Column2

from #CWT_PrimaryKey

where SeqNo=@SeqToFetch

if @@rowcount=0 break

/* We would do something with the data here */

end

drop table #CWT_PrimaryKey

/*

Description of Method CPU Reads Writes Duration

---------------------------------------------------------------------------

CURSOR Approaches:

No Keywords Specified 46120ms 4,011,375 0 52210ms

STATIC READ_ONLY 23906ms 6,061,368 11,817 25068ms <--Winner

Non-CURSOR Approaches:

MIN(ID) 39750ms 6,011,372 0 46021ms

TOP 1 20286ms 3,011,368 0 27137ms

Emulating STATIC CURSOR 23849ms 3,031,241 12,376 33659ms

*/

So, even though we’ve written code to do exactly what a STATIC cursor does, the cursor itself is still faster. Hmmm…KEYSET Cursors

A KEYSET cursor is similar to a STATIC one in the sense that it creates a temporary clustered index table of values behind the scenes. But this time, it only contains the column(s) that make up the primary key to the original table and ignores the rest of the columns. When we do a FETCH from a KEYSET cursor, it retrieves those primary key column(s) from the CWT_PrimaryKey temp table and uses that to do a lookup into the original table to get the rest of the columns that we requested.

Here’s what the Actual Execution Plan looks like for a KEYSET cursor when we OPEN it and FETCH one row:

The OPEN plan looks exactly like the STATIC cursor’s OPEN plan. The only difference is what gets put into the CWT_PrimaryKey temp table. Notice that the only columns being fed to the Clustered Index Insert Operator are the ID (the original table’s Primary Key) and the ROW_NUMBER() value that the Sequence Projection Operator created:

Again, we can write code to emulate the behavior of a KEYSET cursor:

declare @ID intNow let’s collect Profiler statistics on both the KEYSET cursor and our code that emulates it and see how they compare to all our other approaches so far in processing our million-row table:

,@Column1 varchar(50)

,@Column2 varchar(50)

if object_ID('tempdb..#CWT_PrimaryKey','U') is not null drop table #CWT_PrimaryKey

create table #CWT_PrimaryKey (SeqNo bigint primary key

,ID int)

insert #CWT_PrimaryKey

select SeqNo=row_number() over (order by ID)

,ID=ID

from TestTable

declare @SeqToFetch int

set @SeqToFetch=0

while 1=1

begin

set @SeqToFetch=@SeqToFetch+1

select @ID=ID

,@Column1=Column1

,@Column2=Column2

from TestTable

where ID=(select ID from #CWT_PrimaryKey where SeqNo=@SeqToFetch)

if @@rowcount=0 break

/* We would do something with the data here */

end

drop table #CWT_PrimaryKey

/*

Description of Method CPU Reads Writes Duration

---------------------------------------------------------------------------

CURSOR Approaches:

No Keywords Specified 46120ms 4,011,375 0 52210ms

STATIC READ_ONLY 23906ms 6,061,368 11,817 25068ms <--Winner

KEYSET READ_ONLY 36578ms 8,787,165 2,110 38158ms

Non-CURSOR Approaches:

MIN(ID) 39750ms 6,011,372 0 46021ms

TOP 1 20286ms 3,011,368 0 27137ms

Emulating STATIC CURSOR 23849ms 3,031,241 12,376 33659ms

Emulating KEYSET CURSOR 34297ms 6,020,084 2,608 39571ms

*/

Hmmm… DYNAMIC Cursors

We’ve seen that STATIC and KEYSET cursors have a population phase where a temp table is loaded with some information when the cursor is OPENed. DYNAMIC cursors do not create any temp table, but rather read directly from the original table.

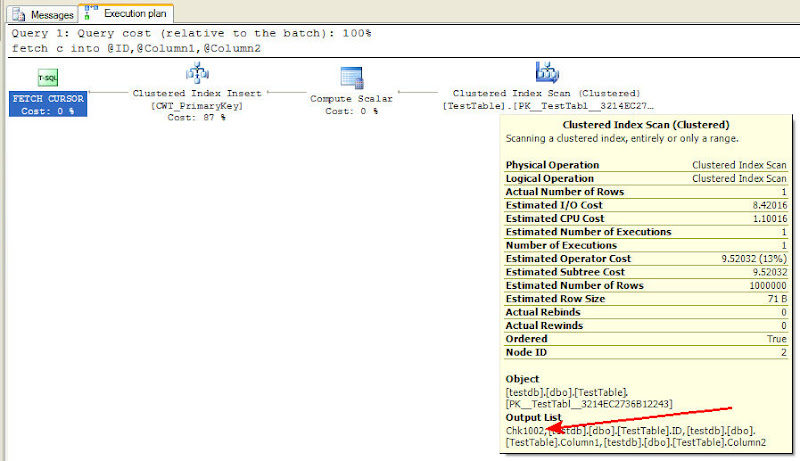

The Actual Execution Plan for OPENing a DYNAMIC READ_ONLY cursor and FETCHing one row looks like this:

So the OPEN doesn’t really do anything plan-wise at all. The FETCH just retrieves its information directly from the source table based on an internal record pointer. The Compute Scalar Operator is just computing a constant value of 1. To be honest, I don’t know what that is for… perhaps it’s just a flag of some kind indicating that this is a DYNAMIC cursor FETCH.

The closest we can come to emulating a DYNAMIC cursor’s behavior is using the method we demonstrated already towards the beginning of this article… by doing a SELECT TOP 1 WHERE ID>@CurrID in a loop.

So how does the DYNAMIC cursor do in processing our million-row table:

/*

Description of Method CPU Reads Writes Duration

---------------------------------------------------------------------------

CURSOR Approaches:

No Keywords Specified 46120ms 4,011,375 0 52210ms

STATIC READ_ONLY 23906ms 6,061,368 11,817 25068ms <--Winner

KEYSET READ_ONLY 36578ms 8,787,165 2,110 38158ms

DYNAMIC READ_ONLY 24270ms 1,011,368 0 29539ms

Non-CURSOR Approaches:

MIN(ID) 39750ms 6,011,372 0 46021ms

Emulating STATIC CURSOR 23849ms 3,031,241 12,376 33659ms

Emulating KEYSET CURSOR 34297ms 6,020,084 2,608 39571ms

TOP 1 [Kind of emulates DYNAMIC] 20286ms 3,011,368 0 27137ms

*/

Wow! The DYNAMIC cursor did really well compared to many of the other approaches. It comes in 3rd place in terms of duration, just behind our SELECT TOP 1 approach and the STATIC cursor, which is still the front-runner.FAST_FORWARD Cursors

Books Online states that FAST_FORWARD cursors are special cursors with “performance optimizations enabled.” In reality, from what I can tell, a FAST_FORWARD cursor is really not its own type of cursor at all. The Optimizer actually makes a judgement call and decides which of the other three cursor types (STATIC or KEYSET or DYNAMIC) to use… in other words, it makes the decision for you.

Here’s the Actual Execution Plan for a FAST_FORWARD cursor when we OPEN it and FETCH one row:

It’s exactly like the DYNAMIC cursor’s plan, except it doesn’t have that mysterious Compute Scalar Operator.

And, sure enough, if we run a FAST_FORWARD cursor on our million-row table, its statistics are practically a clone of the DYNAMIC cursor’s statistics:

/*

Description of Method CPU Reads Writes Duration

---------------------------------------------------------------------------

CURSOR Approaches (LOCAL FORWARD_ONLY):

No Keywords Specified 46120ms 4,011,375 0 52210ms

STATIC READ_ONLY 23906ms 6,061,368 11,817 25068ms <--Winner

KEYSET READ_ONLY 36578ms 8,787,165 2,110 38158ms

DYNAMIC READ_ONLY 24270ms 1,011,368 0 29539ms

FAST_FORWARD READ_ONLY 24328ms 1,011,368 0 29632ms

Non-CURSOR Approaches:

MIN(ID) 39750ms 6,011,372 0 46021ms

Emulating STATIC CURSOR 23849ms 3,031,241 12,376 33659ms

Emulating KEYSET CURSOR 34297ms 6,020,084 2,608 39571ms

TOP 1 [Kind of emulates DYNAMIC] 20286ms 3,011,368 0 27137ms

*/

I imagine the DYNAMIC approach is chosen by the optimizer because it figures that it is more cost effective to not incur the overhead of the STATIC population of a temp table up front. However, the bottom line is that despite the number of Reads and Writes involved, the STATIC cursor still beats out all other approaches in terms of Duration.So all of you who have blindly used FAST_FORWARD cursors may want to investigate whether a STATIC cursor might work better for you.

Cursor Concurrency

Now we will briefly discuss the 3 types of concurrency you can define for a cursor: READ_ONLY, SCROLL_LOCKS, and OPTIMISTIC.

A cursor that is READ_ONLY is self-explanatory. If we define a cursor as READ_ONLY, we cannot perform an UPDATE or DELETE through the cursor via the WHERE CURRENT OF clause of those commands.

If a cursor is defined with SCROLL_LOCKS, then any row that is FETCHed is automatically locked so that any subsequent UPDATE or DELETE will be guaranteed to succeed. Not only that… but when the row is FETCHed, its Current Row Pointer and Primary Key are inserted into our friend the CWT_PrimaryKey temp table.

This is because when we perform an UPDATE with the WHERE CURRENT OF clause, the system will SEEK into CWT_PrimaryKey based on the Current Row Pointer that the cursor is processing, and use the primary key that had been stored there to do a lookup into the original table, and then finally UPDATE the table.

If you run the following code to OPEN a DYNAMIC SCROLL_LOCKS cursor, FETCH one row, and then UPDATE a column for that row via the WHERE CURRENT OF clause…

declare @ID int…here’s what the Actual Execution Plan looks like:

,@Column1 varchar(50)

,@Column2 varchar(50)

declare c cursor local forward_only dynamic scroll_locks

for select ID,Column1,Column2

from TestTable

order by ID

open c

fetch c into @ID,@Column1,@Column2

update TestTable set Column1='XYZ' where current of c

close c

deallocate c

So the UPDATE WHERE CURRENT OF is pretty much doing something like this:

update TestTableNow we get to an OPTIMISTIC cursor. Unlike SCROLL_LOCKS, an OPTIMISTIC cursor does not guarantee that subsequent UPDATEs or DELETEs will succeed. It does not lock the row that had just been FETCHed.

set Column1='XYZ'

where ID=(select ID

from CWT_PrimaryKey

where RowID = <CurrentRowOfCursor>)

Like the SCROLL_LOCKS cursor, though, an OPTIMISTIC cursor saves the Current Row Pointer and Primary Key into the CWT_PrimaryKey temp table. But in addition to those, a CHECKSUM of all the columns of the row is calculated and stored in there as well. You can see this CHECKSUM value as a column being passed along from the original table:

Then, when an UPDATE is done with the WHERE CURRENT OF clause, the system recalculates the CHECKSUM of the row in the original table and only performs the UPDATE if that value is equal to the one that had been stored in CWT_PrimaryKey. In other words, if some other connection successfully performed an UPDATE on the row between the time we FETCHed it and the time we attempted to UPDATE it, then we would get an error upon UPDATE because the CHECKSUM value changed during that time.

The pseudo-code for an UPDATE to a DYNAMIC OPTIMISTIC cursor, then, is something like this:

update TestTableI’ll leave it to you to investigate the Actual Execution Plan for this type of cursor.

set Column1='XYZ'

where ID=(select ID

from CWT_PrimaryKey

where RowID = <CurrentRowOfCursor>)

and checksum(ID,Column1,Column2)=(select CheckSumValue

from CWT_PrimaryKey

where RowID = <CurrentRowOfCursor>)

if @@rowcount=0

begin

/* ERROR! */

end

Now Here’s What They Don’t Tell You

So you can see that an OPTIMISTIC cursor has to calculate a CHECKSUM on every single column and store it, along with some key information, into a CWT_PrimaryKey temp table for every single FETCH we perform, because it has to assume that we might want to subsequently perform an UPDATE or DELETE.

And guess what?

When you do a DECLARE CURSOR without specifying any keywords whatsoever, it defaults to a DYNAMIC OPTIMISTIC cursor!

All those blogs that demonstrate the crappy performance of cursors never declare the cursor with any keywords! So they end up using a DYNAMIC OPTIMISTIC one, which, as you now know, does a bunch of work. So is it any surprise that the performance is bad?

But now you know that a STATIC cursor can be the best performer… even better than the beloved FAST_FORWARD cursor… if you don’t mind the overhead of creating the temp table in TempDB.

All of our tests in this article involved accessing data in order of the primary key making up the clustered index of our table. In Part 2, we will see how the various types of cursors perform (and how our T-SQL equivalents perform) in accessing data in order of a non-clustered index column and in order of a non-indexed column.

Note: After doing my testing in preparation for this article on cursors, I came upon an article by MVP Hugo Kornelis that he wrote in 2007 that did similar testing on the various types of cursors. His findings coincided with mine. Check it out if you want to get a “sneak peek” at my findings in the second part of this series.

And there was light...

ReplyDeleteExcellent article Brad, now I know a lot more than I thought I knew before. And so many procs I've seen in last few months spring back to my mind. I'll have to look at them again.

Thanks!

Brad,

ReplyDeleteThanks for the detailed post. It is everything I was hoping for and more! The only objection I have is that you are declaring winners by duration alone. IMO duration is not reliable enough metric to declare a "winner". Especially when the number of reads is nearly twice as many, and the CPU is greater for the cursor approach. I would personally name the non-curosr approach the winner, in just about every case, as I would rather see a query do half as many reads and have aproximately the same CPU ,than a query that has a smaller duration.

Thanx Brad, very nice.

ReplyDeletePersonally, i prefer queries because i want to let the server do what it was made to do, work on sets. Also, modifying a query is easy. Modifying a CURSOR, can vary based on what needs to be done, and the understanding of the maintenance coder.

@Piotr:

ReplyDeleteThanks for the feedback... I mainly wanted to write the article so that cursors could at least be better understood, and I wanted that extra knowledge to help us at least look at them from a different angle.

@Adam:

You're absoolutely right about the "winner" just being based on duration and that other factors are, of course, important things to consider. I didn't emphasize that enough, but it will come through in the next article in the series. We only looked at processing a table in clustered key order... things change dramatically when processing in other orders, which we'll look at next.

@Brian:

I understand about preferring queries. We all do, but again, if we DO need to access rows one at a time, it's best to use the best tool for the job, and that's what cursors were designed for. Based on the stats in this blog entry, even though the STATIC CURSOR beats out all others in terms of duration, one might still opt to use the TOP 1 approach because it is, after all, only 2 seconds slower and has lower CPU, Reads, and Writes (i.e. none). But stay tuned for Part 2.

--Brad

Awesome article Brad. I was always under the impression that FAST_FORWARD is your best choice, but after read your article and testing your samples, validating the plans - i am enlightened. Thanks.

ReplyDeleteThis is correct, until you put your tempdb on a SAN and have an application server connecting remotely to your SQL server. Then you get killed by the overhead of the network and SAN traffic. You can fix this by putting tempdb locally on the SQL server hardware, even better use an SSD foe the tempdb files. Running this test on your laptop or standalone server does not ell the whole story on why cursors are evil.

ReplyDeleteGreat Article, thank you very much for the detailed explanation. It helped me alot.

ReplyDeleteThank you for the feedback! Glad it helped.

ReplyDeleteNice article Brad...

ReplyDeleteThankyou for it. It added some more knowledge into my brain :-)

I've previewed this blog a couple of times. I LIKE it.

ReplyDeleteYou may want to add that the KEYSET cursor is a hybrid between static and dynamic. For instance, you can insert within the KEYSET cursor and see the insert--however, insert outside the cursor and you cannot see the change. As I understand it, data membership within the cursor is dynamic while changes to the data outside the cursor is treated statically...

I am writing this dated comment in the hope that maybe someone struggling with a cursor can find some solace.

ReplyDeleteFrom my professional experience, the "cursors are evil" debate gets the most play in academia... and when less experienced database developers stumble into religious debates on SO without any consideration of their particular situation. In the real world you don't get paid to avoid cursors. You get paid to deliver solutions on time and on budget.

Seasoned professionals know there are serious pros and cons to ponder when deciding cursors versus set-based operations .... particularly in complex real-world situations.

The use of cursors usually boils down to performance. Ironically, computer science students are taught that accuracy always trumps performance tuning. Once in the business world, no one wants to solve theoretical performance issues in some piece of code that in the end no one cares about. Even when performance tuning is needed, managers must weigh the lifecycle costs of simple, readable maintainable code versus a blazing fast but arcane implementation that meets a particular performance requirement.

Yet all of that gets tossed out the window by the database community.

The main argument AGAINST cursors (except in trivial situations) of course is performance, even though technologies such as snapshot isolation largely addressed earlier database locking issues etc. Besides, as the author points out, the database may end up doing row-wise operations anyway. By framing the problem in terms of sets, you are giving the database the opportunity to work a faster solution but it is not guaranteed. The main argument FOR cursors is readability, which is exactly why so many beginner developers gravitate towards them.

I once sat in an advanced computer science class at Carnegie-Mellon with a top MIT professor who horrified the audience by presenting a single line goto statement as an incredibly simple, safe and elegant way to solve a really nasty 3GL programming problem. The room was stunned but his message was clear - never forget the big picture.

If the problem is complex, the data is messy, and/or the risk of trashing data is unacceptable, I almost always start with a cursor then consider removing as performance requirements dictate. A coding bug in a set-based operation can inflict catastrophic damage whereas a slow-running row-based operation is more of an annoyance. The other major consideration is that cursors are fairly simple to debug e.g. insert row xxx failed due to invalid FK. When a set operation fails, you often have to resort to a cursor anyway to find the problem data.

Anyway, that's my two cents on the Truth About Cursors.

Great post Brad ! How does the performance of tempdb disk compare to the disk on which your database is hosted? The differences noticed in time when you emulate the behavior of cursor could be due to that.

ReplyDeleteThanks for your feedback, Anonymous.

ReplyDeleteTempDB location/performance can certainly influence the results. My testing for this blog post was just done on my laptop, where my test database and TempDB were in the same physical location.

I was looking all over for this information. Thanks Brad. I was trying to figure out why a terrible performance problem that was showing a long running fetch suddenly improved dramatically when I cleared the cache. No fetch showed up as long running anymore and the user was happy. Now I know that the execution plan was changed for a KEYSET or DYNAMIC cursor. Too bad it is buried in vendor code.

ReplyDeleteIn our case, we have a converted mainframe COBOL program using SQL Server. The COBOL (running on a Windows server) is using nested cursors. Batch jobs can take a long time. The time varies, perhaps because of network latency. I partially converted one of our cursor processes to set based and cut the time from 8 hours to 5 minutes.

ReplyDeleteMaybe the cursor is optimized for 1 record at a time processing while a set-based query requires extra overhead (and buffers) to send any number of records. It's not really fair to compare a cursor to a "set-based" query forced to scan one row at a time - just like a cursor. Why not compare the cursor to a set-based version that gets 1000 at a time and loads them into a app buffer for processing? There's also efficiency in letting SQL Server handle some of the processing (e.g., joins or sub-queries) in memory and avoiding a bunch of network latency.