And what “extra thing” did I give to those queries? I’ll give you a hint: I gave them a hint.

I Want It FAST

For example, there was one procedure that did a little calculation to come up with a particular date, and then it needed to pull out some information from some tables based on a 1-day date range from that date. It was something like this query in AdventureWorks:

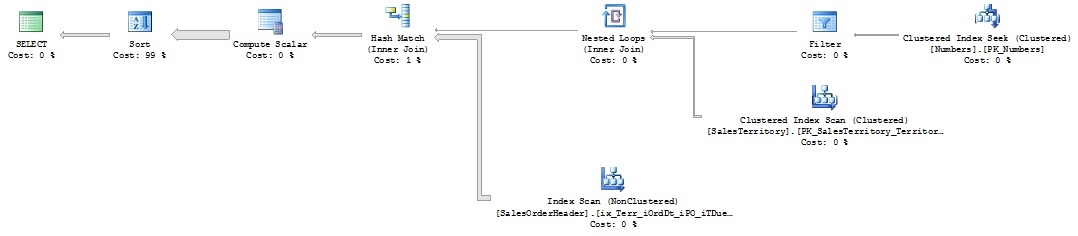

declare @DesiredDateAtMidnight datetime = '20010709'That particular query produces this (actual) execution plan (click on the plan to see the full-size image in a new window):

declare @NextDateAtMidnight datetime = dateadd(day,1,@DesiredDateAtMidnight)

select OrderID=h.SalesOrderID

,h.OrderDate

,h.TerritoryID

,TerritoryName=t.Name

,c.CardType

,c.CardNumber

,CardExpire=right(str(100+ExpMonth),2)+'/'+str(ExpYear,4)

,h.TotalDue

from Sales.SalesOrderHeader h

left join Sales.SalesTerritory t on h.TerritoryID=t.TerritoryID

left join Sales.CreditCard c on h.CreditCardID=c.CreditCardID

where OrderDate>=@DesiredDateAtMidnight

and OrderDate<@NextDateAtMidnight

order by h.SalesOrderID

/*

OrderID OrderDate TerritoryName CardType CardNumber CardExpire TotalDue

------- ---------- --------------- ------------- -------------- ---------- ---------

43728 2001-07-09 Southwest Distinguish 55553397232060 03/2007 3953.9884

43729 2001-07-09 United Kingdom ColonialVoice 77771647096870 03/2006 3756.989

43730 2001-07-09 Southwest Distinguish 55552313505236 09/2007 3756.989

43731 2001-07-09 Australia Vista 11114001441348 11/2006 3953.9884

43732 2001-07-09 Australia Distinguish 55557723662910 11/2007 3729.364

43733 2001-07-09 Northwest SuperiorCard 33338859457470 04/2008 3953.9884

*/

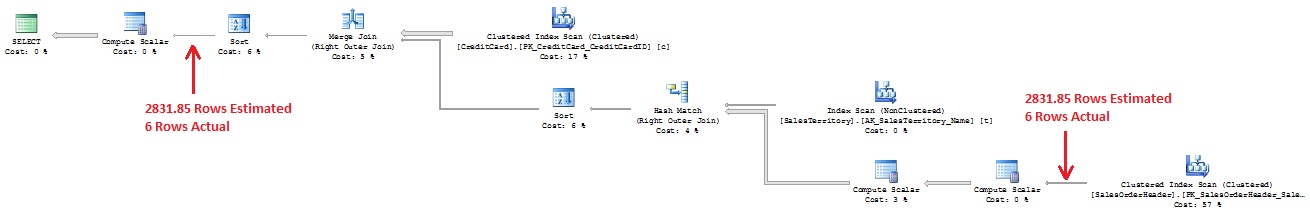

The optimizer decided to approach this by doing a Merge Join between the CreditCard table (which has 19118 rows) and the result of a Join of the Territory and the SalesOrderHeader tables. Note that it had to SORT the Territory/SalesOrderHeader data by CreditCardID so that it could do that Merge Join. And then, after that, it had to SORT the final data by SalesOrderID, because that’s what the ORDER BY clause of the query called for.

Note, also that the optimizer estimated that my WHERE clause would pull 2831.85 rows (out of a total of 31465) from SalesOrderHeader. How did it arrive at this figure? Since the @DesiredDateAtMidnight and @NextDateAtMidnight variables had values that were unknown to the query, it had to make assumptions. The optimizer assumes that equality-based predicates are 10% selective and inequality-based predicates are 30% selective. Since I had two inequality-based predicates, it assumed that together they would be 30% * 30% = 9% selective. And, sure enough, 31465 total rows * 9% = 2183.85 rows.

But the final result of the query was only 6 rows.

This is the situation that I was in with my client’s query. The optimizer grossly over-estimated how many rows it would find, and so it came up with a query that was efficient for handling that many rows. But I knew that a day’s worth of data from the particular tables that I was querying would produce about an average of 10 rows, not thousands of rows.

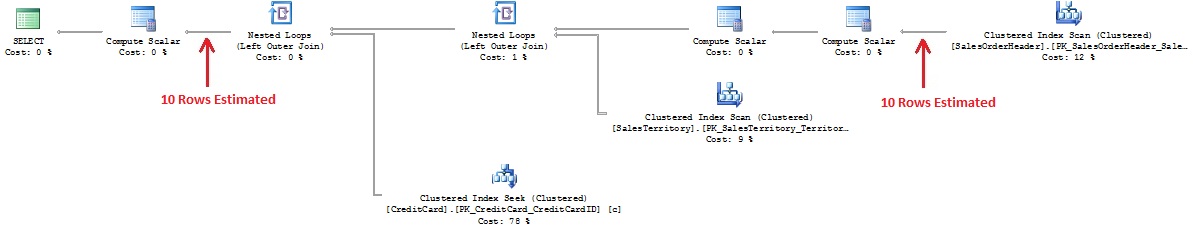

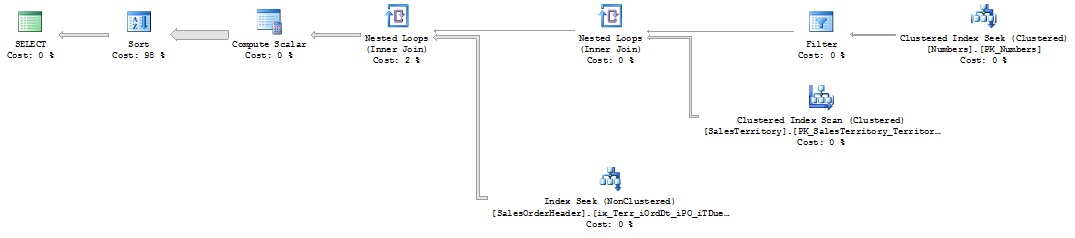

So I gave the optimizer a hint… specifically I gave it a FAST 10 hint, indicating that it should put together a plan that would return 10 rows as fast as possible. Adding OPTION (FAST 10) to the end of our query above produces this (actual) execution plan:

Since the optimizer now knew that only 10 rows were estimated, it could put together a more appropriate plan for that small result. Note that there are Nested Loop Joins rather than a Merge Join and a Hash Join, and there are no more Sort operators, since the main driving table of the query is the SalesOrderHeader Clustered Index, which is already sorted by SalesOrderID.

I could have used the OPTION (RECOMPILE) hint instead, which would allow the optimizer to “sniff” the values of the variables and put together an appropriate plan based on their values, but that would involve having to recompile the plan from scratch every time this procedure was run, and therefore the plan would not go into the cache for others to use. The OPTION (FAST 10) plan, on the other hand, would be cached for others.

The Profiler statistics for the two queries are as follows:

/*

Description CPU Reads Duration QueryCost Relative%

-------------------------------------------------------------

No Hint Provided 63ms 889 288ms 0.9698090 96%

OPTION (FAST 10) 31ms 775 173ms 0.0450217 4%

*/

The CPU is so low for both queries that it’s really meaningless to compare them, but you can see that the Number of Reads and the Duration are lower by introducing the hint. The optimizer figured the second approach was much cheaper, but that’s mainly because, as far as it was concerned, it was comparing a 2184-row query to a 10-row query.In my particular case with the client, the query was a bit more complicated, so the difference in CPU and Reads was much more dramatic. By adding the FAST 10 hint, the CPU was decreased by 60% and the Reads were decreased by 75%.

Using the FORCE

Another query that I wanted to tune incorporated an array-splitting (inline) table-valued function that used a Numbers table.

Let’s put together an example in AdventureWorks to illustrate what I was dealing with. First, create a Numbers table consisting of the integers from 1 to 1,000,000 and create a clustered index on it:

;withNext, create an inline table-valued function that would use that Numbers table to split a comma-delimited list of integers:

L0(c) as (select 0 from (values (0),(0),(0)) x(c)) --3 Rows

,L1(c) as (select 0 from L0 a, L0 b, L0 c) --27 Rows (3x3x3)

,L2(c) as (select 0 from L1 a, L1 b, L1 c) --19683 Rows (27x27x27)

,L3(c) as (select 0 from L2 a, L2 b) --387,420,489 Rows (19683x19683)

,NN(n) as (select row_number() over (order by (select 0)) from L3)

select Number=isnull(convert(int,n),0) --Force it to be a "not null" column

into dbo.Numbers

from NN

where n<=1000000

go

alter table dbo.Numbers

add constraint PK_Numbers

primary key clustered (Number)

with (fillfactor=100)

create function dbo.ufn_SplitIntArrayYou can see how this function is used in the example below:

(

@List varchar(max)

,@Delimiter varchar(10)

)

returns table

as

return

select Item=convert(int,String)

from dbo.Numbers with (nolock)

cross apply (select ItemPos=Number) F1

cross apply (select DelimPos=charindex(@Delimiter,@List+@Delimiter,ItemPos)) F2

cross apply (select String=rtrim(ltrim(substring(@List,ItemPos,DelimPos-ItemPos)))) F3

where ItemPos<=convert(int,len(@List))

and substring(@Delimiter+@List,ItemPos,1)=@Delimiter

select *Now that we have that in place, we can see a query that makes use of this function. The following will take a list of TerritoryID’s and will list the SalesOrderHeader rows that are in those Territories, along with the Territory Description:

from dbo.ufn_SplitIntArray('123,456,789',',')

/*

Item

----

123

456

789

*/

declare @TerritoryList varchar(max) = '2,3,5'And here is the (actual) execution plan for that query:

select h.SalesOrderID

,h.OrderDate

,t.TerritoryID

,TerritoryName=t.Name

,h.PurchaseOrderNumber

,h.TotalDue

from dbo.ufn_SplitIntArray(@TerritoryList,',') a

join Sales.SalesTerritory t on a.Item=t.TerritoryID

join Sales.SalesOrderHeader h on t.TerritoryID=h.TerritoryID

order by h.SalesOrderID

/*

SalesOrderID OrderDate TerritoryID TerritoryName PurchaseOrderNumber TotalDue

------------ ---------- ----------- ------------- ------------------- ----------

43659 2001-07-01 5 Southeast PO522145787 27231.5495

43660 2001-07-01 5 Southeast PO18850127500 1716.1794

43667 2001-07-01 3 Central PO15428132599 8095.7863

...

74193 2004-07-02 5 Southeast NULL 43.0729

74548 2004-07-13 5 Southeast NULL 38.675

75103 2004-07-31 5 Southeast NULL 44.1779

(1223 rows total)

*/

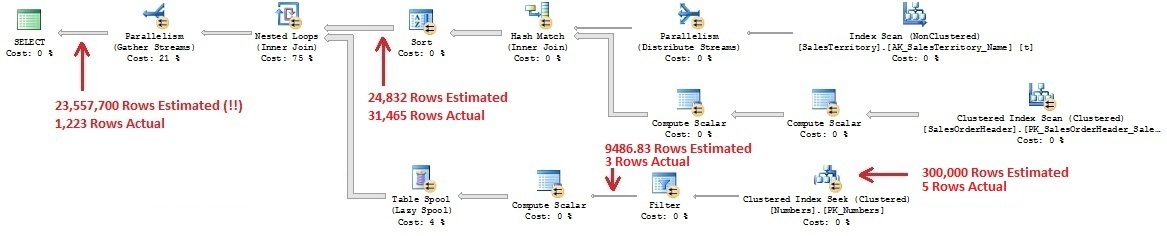

Notice that Parellelism is involved in this plan. The optimizer figured our query was so expensive that it decided it was cheaper to spread the burden of the query across the 4 processors in my system.

As far as how to attack the query, the optimizer put together a plan that starts by scanning the SalesTerritory table and Hash Joins it with the SalesOrderHeader table, resulting in an estimated 24,832 rows.

It also scans the Numbers table, first satisfying the predicate of

where Number<=convert(int,len(@TerritoryList))Since the optimizer has no idea what is in @TerritoryList, it makes an inequality-based assumption that the predicate will have a selectivity of 30%, and therefore it estimates that it will produce an estimated 1,000,000 * 30% = 300,000 rows. In reality, it only produced 5 rows.

Then it applies a filter to those rows to pull the positions of the comma-delimited numbers in @TerritoryList… in other words a filter to satisfy the predicate of

where substring(','+@TerritoryList,Number,1)=','It estimates that those 300,000 rows will filter down to 9486.83 (I have no idea how it arrived at this 3.16% selectivity figure). In reality, it only produced 3 rows. Finally, the optimizer plans on storing those rows into a Spool for repeated access by the Nested Loops operator.

Now the Nested Loops operator will match up each of the estimated 24,832 rows with each of the estimated 9486.83 rows from the spool and will find the ones that satisfy this JOIN predicate (which is implied by incorporating our inline table-valued function in our query):

convert(intSince that’s an equality predicate, it assumes 10% selectivity, so it comes up with a final estimated result of 24,832 * 9486.83 * 10% = 23,557,700 rows for the final result. Wow! In reality, the Nested Loops will match 31,465 rows with the 3 rows in the spool (producing 94,395 matches) and end up only finding 1223 that satisfy the JOIN predicate (a selectivity of only 1.3%).

,rtrim(ltrim(substring(@TerritoryList

,Number

,charindex(',',@TerritoryList+',',Number)-Number

)

)

)

) = SalesTerritory.TerritoryID

The bottom line here is that the optimizer got scared by that million-row table of Numbers and grossly overestimated how many values it would pull from that table and so put together a plan to most efficiently handle a final product of over 23 million rows. That’s why it started by processing the 31,465-row SalesOrderHeader table and spooled the estimated 9486.83 rows from the Numbers table that it would match up with each of those.

But we know that, in reality, our @TerritoryList will only consist of a small handful of comma-delimited values, so the plan should ideally start with the Numbers table, producing the positions of the values in @TerritoryList and, for each of those values, look them up in the SalesTerritory and then find the rows in SalesOrderHeader with that TerritoryID.

In other words, it should process the plan in exactly the order that I specified in the query:

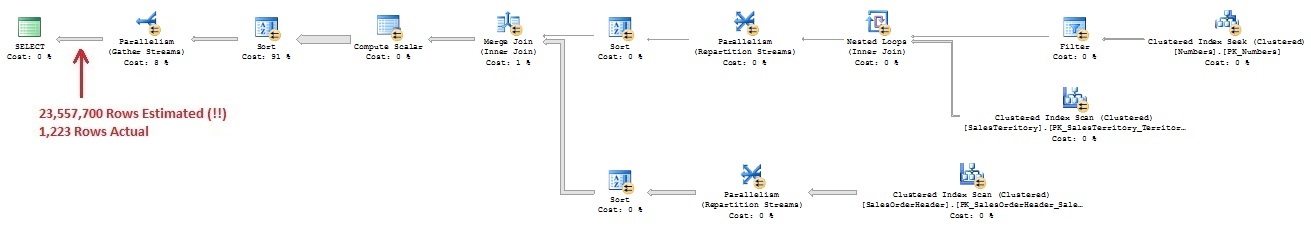

from dbo.ufn_SplitIntArray(@TerritoryList,',') aWell, there’s a hint for that! If we add an OPTION (FORCE ORDER) to our query, we end up with a completely new (actual) execution plan:

join Sales.SalesTerritory t on a.Item=t.TerritoryID

join Sales.SalesOrderHeader h on t.TerritoryID=h.TerritoryID

And here are the statistics that Profiler gives us in comparing the two plans:

/*

Description CPU Reads Duration QueryCost Relative%

----------------------------------------------------------------

No Hint Provided 1499ms 63,783 854ms 1167.11 27%

FORCE ORDER 141ms 3,660 314ms 3149.68 73%

*/

Nothing changed in what the optimizer estimates… it still thinks the query will produce 23 million rows. And it obviously thinks we’re completely insane to do a FORCE ORDER, because it estimates that the cost will almost triple by doing it this way. But you and I know better, and by introducing that hint, we decreased the CPU and Reads dramatically.But this new plan still has Parallelism in it. The optimizer thought we needed it because it thinks the cost is so ridiculous that it will be cheaper to split up the query among the 4 processors in my computer. And, interestingly enough, it figures that by doing that split, it can introduce Sort operators to order the two main streams by TerritoryID and do a Merge Join, and then turn around and do a Sort on SalesOrderID for the final result.

Well, we know that our final result is not going to be that large… it’s certainly not going to be anywhere even close to 23 million rows! So let’s just get rid of the Parallelism. We’ll add one additional hint (MAXDOP 1) to our query in order to force it to only use one processor:

option (force order, maxdop 1)Here’s the (actual) execution plan that results from that hint:

See how simple that new plan is? Now how does that compare to the previous two plans?:

/*

Description CPU Reads Duration QueryCost Relative%

--------------------------------------------------------------------

No Hint Provided 1499ms 63,783 854ms 1167.11 12%

FORCE ORDER 141ms 3,660 314ms 3149.68 31%

FORCE ORDER, MAXDOP 1 31ms 741 175ms 5788.61 57%

*/

The optimizer thinks we’ve completely lost our mind, since it estimates the Cost is 5788.61… over 5 times more expensive than the plan it would have produced without hints. It’s probably snickering… laughing behind our backs and thinking, “Your stupid plan with hints will take over an hour and a half to run, but if you let me do my job, I can do it all in only 20 minutes!”But that couldn’t be further than the truth. By introducing the hints, we reduced the CPU by 98% and reduced the Reads by 99%.

One More LOOPy Enhancement

But wait! We can even do better!

The plan still involves a Clustered Index Scan of the entire SalesOrderHeader table. If we can create an index on TerritoryID and INCLUDE the other columns required by the query, then we could SEEK into that index by TerritoryID rather than SCANning it.

So let’s create an index… Note that the TotalDue column in SalesOrderHeader is actually a computed column based on SubTotal and TaxAmt and Freight, so we have to INCLUDE those columns:

create index ix_Terr_iOrdDt_iPO_iTDueNow let’s run our query (with our two hints) and see if that index made a difference in the plan:

on Sales.SalesOrderHeader

(TerritoryID)

include (OrderDate,PurchaseOrderNumber,SubTotal,TaxAmt,Freight)

Hmmm… the only thing that changed was that it was SCANning our new index rather than SCANning the Clustered Index. That improves the query somewhat, decreasing the number of reads, because our new index is smaller in terms of number of pages:

/*

Description CPU Reads Duration

-----------------------------------------------

No Hint Provided 1499ms 63,783 854ms

FORCE ORDER 141ms 3,660 314ms

FORCE ORDER, MAXDOP 1 31ms 741 175ms

Add New Index 31ms 232 172ms

*/

But we created that index to take advantage of the TerritoryID. We wanted it to SEEK into the index rather than SCAN the entire thing.So another hint to the rescue!

This time we will tell the optimizer that we only want it to use LOOP JOINs. So here’s our new query with all the hints involved:

declare @TerritoryList varchar(max) = '2,3,5'And here’s the new (actual) execution plan that it produces:

select h.SalesOrderID

,h.OrderDate

,t.TerritoryID

,TerritoryName=t.Name

,h.PurchaseOrderNumber

,h.TotalDue

from dbo.ufn_SplitIntArray(@TerritoryList,',') a

join Sales.SalesTerritory t on a.Item=t.TerritoryID

join Sales.SalesOrderHeader h on t.TerritoryID=h.TerritoryID

order by h.SalesOrderID

option (force order, maxdop 1, loop join)

Note that we now have a Nested Loops Join that now SEEKs into our new index. And the results?

/*

Description CPU Reads Duration

-----------------------------------------------

No Hint Provided 1499ms 63,783 854ms

FORCE ORDER 141ms 3,660 314ms

FORCE ORDER, MAXDOP 1 31ms 741 175ms

Add New Index 31ms 232 172ms

Add LOOP JOIN Hint 16ms 69 144ms

*/

Hah! So we’ve ultimately decreased the Reads by 99.9% from 63,783 down to a measly 69. That’s the way it should be with a straightforward query like this one.Thank goodness for the existence of hints. They’re one more effective tool we can use to

Gold:

ReplyDelete“Your stupid plan with hints will take over an hour and a half to run, but if you let me do my job, I can do it all in only 20 minutes!”

Time to get out my Great-post-as-usual stamp.

Great post as usual Brad!

I've always been worried about telling the optimizer that I know better. I'm always afraid that 5 months down the lines. Data distribution in tables and parameters change, everything goes downhill and then the optimizer says "told you so"

Fun fact: "Timeout expired. The timeout period elapsed..." is ADO.net speak for "told you so"

@Michael:

ReplyDeleteThanks for the comments, as always.

I understand your worry about "telling the optimizer that I know better." The FAST 10 example that I gave certainly has the potential to not be scalable some time down the road as a business potentially grows.

But the second example with the Numbers table is a different kind of animal.

Stay tuned for my next post... I think you'll get a kick out of it.

--Brad

Great article Brad!

ReplyDeleteI agree with both of you guys on this one. While hints, can make queries magnitudes faster the potential for havoc down the road can be somewhat high. As Michael said, it depends a lot of the distribution of data, among other factors. I have been on the bad side of the fence a few times with this. I have had problems with developers using join hints (like loop) and index hints. What works well today may not work well in 6 months. The key is to use hints with caution and you have to continuously monitor them because any shift could potentially make performance magnitudes worse.

Another thing of note is the option fast option may return the n number of rows faster, but the remaining rows may be returned more slowely than if you had not specified the fast hint. This option should too be handled with care, as it too is a double-edged sword.

@Brad,

ReplyDeleteI enjoyed your post. I happen to be researching this behavior as well and one thing I noticed was that SQL Server 2005 and 2008 have pretty noticeable differences in cardinality estimation.

In particular I have an example where in SQL2k8, the inner join following the number split function uses 10% equi-join estimation per (execution), and in SQL2k5, it uses some other factor (a lot more selective). Net result being a large difference in row estimations at the end, which in my case led to extreme differences in memory grant requests.

@BradK:

ReplyDeleteAll my tests were done in SQL2008. But I can verify that doing the same thing in SQL2005 does, in fact, bring about a smaller estimated row set.

Instead of the 23,557,700 rows that SQL2008 estimates, SQL2005 estimates only 13,423,100 rows, which is about 5.8% (instead of 10%).

And, as you say, the memory grant is much smaller (only 704K vs 11264K) because fewer (estimated) rows have to be sorted.

Thanks for adding that additional info! Interesting...

--Brad

Nice post Brad, showing the optimiser who's the boss eh

ReplyDeleteJohn Alan

Great Article Brad. I didn't have any knowledge on query hint and after reading this article, i realize the use of hint on performance tuning queries.

ReplyDeleteThanks for sharing your knowledge.