/*

Description CPU Reads

----------------------------------------------

SQL2008:

STATIC Cursor 23906ms 6,061,368

Emulating STATIC Cursor 23849ms 3,031,241 (Why is this half the reads???)

SQL2005:

STATIC Cursor 22378ms 6,107,958

Emulating STATIC Cursor 25402ms 6,128,352 (Reads are on par, but CPU higher)

*/

Why the heck is this? Doesn’t it seem a little weird that our T-SQL Code to emulate a STATIC Cursor only has half the Logical Reads of an actual STATIC Cursor? That’s what the figures show in SQL2008, but not in SQL2005.I’ll take you now on my journey in trying to track this down.

Again, here is the code to create our million-row table:

if object_id('TestTable','U') is not null drop table TestTableLet’s just examine the population phase of the cursor and our emulation. We’ll execute code to OPEN a STATIC cursor in clustered key order (ID), so that it does the population of its temporary table, and then just CLOSE it up again. And, in addition, we’ll execute T-SQL code that emulates that same population phase of the STATIC cursor:

go

create table TestTable

(

ID int identity(1,1) primary key

,Column1 varchar(50)

,Column2 varchar(50)

)

go

with

L0(c) as (select 0 from (values (0),(0),(0)) x(c)) /* 3 Rows */

,L1(c) as (select 0 from L0 a,L0 b,L0 c) /* 27 Rows=3x3x3 */

,L2(c) as (select 0 from L1 a,L1 b,L1 c) /* 19683 Rows=27x27x27 */

,L3(c) as (select 0 from L2 a,L2 b) /* 387,420,489 Rows=19683x19683 */

,NN(n) as (select row_number() over (order by (select 0)) from L3)

insert TestTable (Column1,Column2)

select newid(),newid()

from NN

where n<=1000000

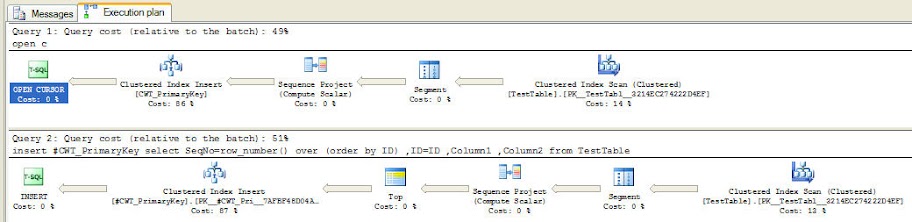

/* STATIC Cursor Population */And here is the Actual Execution Plan for those:

declare c cursor local forward_only static read_only

for select ID,Column1,Column2

from TestTable

order by ID

open c

close c

deallocate c

/* T-SQL Code to do the same kind of population */

if object_ID('tempdb..#CWT_PrimaryKey','U') is not null drop table #CWT_PrimaryKey

create table #CWT_PrimaryKey (SeqNo bigint primary key

,ID int

,Column1 varchar(50)

,Column2 varchar(50))

insert #CWT_PrimaryKey

select SeqNo=row_number() over (order by ID)

,ID=ID

,Column1

,Column2

from TestTable

You’ll notice that they are exactly identical (except for the ROWCOUNT TOP operator that got thrown in the manual-population query)… Interestingly, the optimizer estimated the relative costs at 49%:51%, figuring the cursor had the edge.

But what are the Profiler figures for the two processes (in SQL2008)?

/*

Description CPU Reads Writes Duration

-------------------------------------------------------------

STATIC Cursor OPEN 5593ms 3,061,364 11,816 6844ms

INSERT #CWT SELECT 2188ms 29,814 12,375 9438ms

*/

Whoa! Wait a second here! The cursor generated 3 million reads and our manual INSERT only generated 30,000? And the CPU time for the cursor was more than twice that for the manual INSERT. (Despite all that, the STATIC Cursor still wins in terms of Duration time… go figure).Logical Reads are counted when you SELECT rows from a table, but they are also counted when you INSERT rows into a table… especially a table with a clustered index, because it has to read pages in the index to determine where to insert each row, and it has to read the data page to put information about it into the Transaction Log. Both of our approaches read a million rows from our table, and they both created a million rows in a temporary table with a clustered index. Why the enormous difference in Logical Reads?

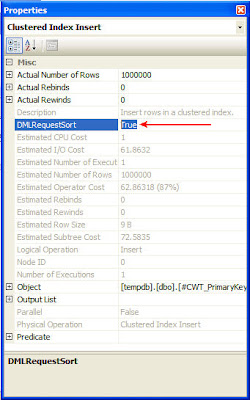

After doing some digging, I traced it down to this… Look at the Properties Window for the Clustered Index Insert Operator in our manual INSERT process:

There’s this mysterious DMLRequestSort property and it’s set to True. On the other hand, the same Clustered Index Insert Operator in our Cursor plan has it set to False.

What if we didn’t INSERT those million rows into a temporary table whose name started with ‘#’? What if we just INSERTed into a table in TempDB that had a regular name that could be accessible by any other session?

if object_ID('tempdb..xCWT','U') is not null drop table tempdb..xCWTHow does it compare with our other two approaches (in SQL2008)?

create table tempdb..xCWT (SeqNo bigint primary key

,ID int

,Column1 varchar(50)

,Column2 varchar(50))

insert tempdb..xCWT

select SeqNo=row_number() over (order by ID)

,ID=ID

,Column1

,Column2

from TestTable

/*

Description CPU Reads Writes Duration

-------------------------------------------------------------

STATIC Cursor OPEN 5593ms 3,061,364 11,816 6844ms (DMLRequestSort=False)

INSERT #CWT SELECT 2188ms 29,814 12,375 9438ms (DMLRequestSort=True)

INSERT xCWT SELECT 8203ms 3,078,430 12,400 12938ms (DMLRequestSort=False)

*/

Well, isn’t that fascinating? It seems that SQL Server turns off the Logical Read count (via this DMLRequestSort property) when you are INSERTing into a temporary table (prefixed with ‘#’) clustered index. And it’s doing this because we are INSERTing the rows in the actual order of the clustered index (because we’re populating its primary key (SeqNo) with a ROW_NUMBER() function).Take a look at the output from SET STATISTICS IO (which, by the way, doesn’t log as many reads as Profiler):

/*

STATIC Cursor:

Table 'Worktable'. Scan count 0, logical reads 3015959,...

Table 'TestTable'. Scan count 1, logical reads 11407,...

INSERT #CWT SELECT:

Table 'TestTable'. Scan count 1, logical reads 11407,...

INSERT xCWT SELECT:

Table 'xCWT'. Scan count 0, logical reads 3023334,...

Table 'TestTable'. Scan count 1, logical reads 11407,...

*/

All three approaches read from TestTable (11407 logical reads), and about 3 million reads are generated when INSERTing into the target table… but not for our INSERT into our temp table that’s prefixed with a ‘#’ character!! It doesn’t register any Reads in the target table whatsoever!I couldn’t find anything in Books Online… or anywhere on the web whatsoever… about this DMLRequestSort property at all. The only two specific mentions of it were in a MSFT Connect Item Bug Report (the bug had to do with INSERTing into a table variable) and in a French SQL Forum (and that only existed because MVP David Barbarin posted it earlier this month to ask around about the property for me), but that was it… No information on what it is or what it indicates.

(Of course, now this article you’re currently reading will bring the specific web mention count up to three). 8^)

I suspected that it had to do with logging. Since the optimizer senses that we are inserting rows into the temporary table clustered index in order of the clustered index key, I figured it must somehow do it in bulk and minimally log it. It only does this with a temp table that is local to us (i.e. prefixed with ‘#’)… it does not do it for a table that can be accessible by another session.

But if that’s the case, then the cursor is getting shortchanged here, dontcha think? The CWT_PrimaryKey table that it populates is also a table that is local to us… it can’t be accessed by another session… heck, it can’t even be accessed directly by us, except indirectly via the FETCH command. It seems to me that it should be populated in bulk with minimal logging as well. But it’s not.

If my suspicions about minimal logging were correct, that would also explain why the CPU is lower in our manual INSERT code… minimal logging equals less CPU. And that’s why our emulation code always won in our tests in terms of Logical Reads and CPU time.

I wanted to do some more digging and convince myself that minimal logging was, in fact, occurring.

So I put together the following code to insert a quantity of rows into a temp table (prefixed with ‘#’) and then look at the Transaction Log (via the sys.dm_tran_database_transactions DMV) to see what got put into it:

/* How many rows shall we insert? */I ran this for various quantities of rows from 100 on upward, recording the Transaction Log figures. And then I ran the same code, but this time for a table in tempdb without a ‘#’ prefix to its name.

declare @RowsToInsert int = 1000

/* Begin the transaction */

begin transaction

/* Create the table and Insert the requested number of rows */

if object_ID('tempdb..#CWT_PrimaryKey','U') is not null drop table #CWT_PrimaryKey

create table #CWT_PrimaryKey (SeqNo bigint primary key

,ID int

,Column1 varchar(50)

,Column2 varchar(50))

set rowcount @RowsToInsert

insert #CWT_PrimaryKey

select SeqNo=row_number() over (order by ID)

,ID=ID

,Column1

,Column2

from TestTable

set rowcount 0

/* Look at the Transaction Log data */

select database_transaction_log_record_count

,database_transaction_log_bytes_used

from sys.dm_tran_database_transactions

where database_id=db_id('tempdb')

and database_transaction_begin_time is not null

/* Close the transaction */

commit

Here is a chart showing Log Records created for various quantities of inserted rows from 100 to 1000:

Both generated Log Records in a consistent manner for inserted rows up to 250. And then at 251 inserted rows, the Transaction Log Records count fell off a cliff for the table with a ‘#’ prefix. This is where the DMLRequestSort property kicked in… It was False when inserting 100 rows and for inserting 200 rows and for inserting 250, but when I inserted 251 rows (and any number above that), it turned to True, and the logging became minimal. It seems 251 rows is the “tipping point” for the rowsize of the data that I was inserting. It would be a different number of rows for other rowsizes… but I haven’t taken the time to figure out how that “tipping point” is calculated.

The same phenomenon continues… Here is a chart for inserted rows from 1000 to 10000:

And so on and so on and so on up to a million rows. When I insert 1 million rows into a temp table without a ‘#’ prefix, it creates 1,000,078 Log Records. But inserting rows into the temp table with a ‘#’ prefix never brings the Log Record Count higher than 400 or so.

So it looks like this DMLRequestSort=True phenomenon is a nice shortcut introduced in SQL2008 to cut down on logging and therefore Reads and CPU.

But it doesn’t get applied to STATIC (or KEYSET) cursors that create a temp table of data when they are OPENed.

Once again, the unfortunate cursors are shunned and are not invited to the optimization party.

Once again, the unfortunate cursors are shunned and are not invited to the optimization party.Poor cursors… sniff…

They always get the short end of the stick… sob…

I sincerely hope you enjoyed this series on cursors.

Admit it… you probably hated cursors with a passion before you started reading the series, but then, as you grew to understand them and how they worked and performed in Parts 1 and 2, you gained a little bit of appreciation and respect for them, and now, at the end of this final installment in the series, you perhaps feel a little empathy and compassion towards them.

Right?

Well, if so, then my work is done…

…And there’s no need to mention the #&*@ things in my blog ever again.

Thanks for posting this series Brad, it's been really informative and entertaining at the same time! :-)

ReplyDeleteGreat series. I enjoyed the investigation in Part 3 especially!

ReplyDeleteHey Brad,

ReplyDeleteThe lower number of logical reads and the minimal logging you see with the temporary table is due to the new capability of SQL Server 2008 to do minimally-logged INSERT...SELECT statements.

To achieve minimal logging to a table with a clustered index (without enabling TF610) the table must be empty, there must be no non-clustered indexes, a table lock must be taken, and rows must arrive in CX order.

Your local temporary table meets all these requirements (#local tables are private so that meets the TABLOCK restriction).

The DMLRequestSort is simply a plan flag (like Ordered:True) set by the QO to ensure that rows are presented to the Clustered Index Insert iterator in key order, as required for minimal logging.

Enabling TF610 would allow minimal logging to the 'real' table in your example.

Minimal logging (with or without TF610) does not apply to populating a cursor worktable.

Thanks for a great read, as usual!

Paul

www.sqlblog.com/blogs/paul_white

@Paul:

ReplyDeleteThanks so much for the extra detail. Excellent stuff!

And, by the way, you've got an outstanding blog at SQLBlog.com. I love all the analysis and digging into the optimizer. Keep up the great work!

--Brad

Brad,

ReplyDeleteGosh! Thanks so much - I had no idea I had such an illustrious reader!

Your blog is a firm favourite of mine - not sure how I managed to miss this post until today.

Paul

Good post. I recently (finally) got 2K8 and this post gave me something good to test. The difference is remarkable in a good way here just like the difference was in a bad way with the data mismatch you demonstrated on the "Fable" thread.

ReplyDeleteThanks.

I always find it perplexing when people say things like "never use rowids or cursors" and then we re-discover that the DBMS uses them behind the scenes because... what else is it going to use? ESP? I think that the bias against cursors is just like any other bias. I recall arguing with developers about this and then when they realized how my applications worked, they said, "Oh..." (I created applications that were not dependent on the schema or DBMS vendor.)

ReplyDeleteJust read the three parts, and WOW I passed 8 years late. The information still holds.

ReplyDeleteNice work Brad, well presented.

This is an interesting exploration of the differences in performance between SQL versions.

ReplyDelete