I came across a couple of incidents in the last few weeks at the MSDN T-SQL Forum that are good illustrations of why it’s important to look under the hood and investigate query plans and see what’s really going on with the optimizer.

I came across a couple of incidents in the last few weeks at the MSDN T-SQL Forum that are good illustrations of why it’s important to look under the hood and investigate query plans and see what’s really going on with the optimizer.Both of these incidents involve the way that the optimizer translates certain language elements.

The first of these, involving the COALESCE() function, is fairly straightforward, and it has been talked about in other blogs.

But the behavior in the second incident, involving multiple NOT EXISTS predicates, came as a big surprise to me and others, and it uncovered other unexpected phenomena.

COALESCE() ==> CASE Expression

In a message in the forum a few weeks ago, someone wanted to demonstrate how to calculate running totals. Here’s an example of the type of query he posted, calculating running totals of the OrderQty column by SalesOrderID in the SalesOrderDetail table in AdventureWorks:

select SalesOrderIDSo, for each row, the running total is calculated by taking the current row’s OrderQty amount and adding it to the result of a scalar subquery which totals the OrderQty values of all previous rows for that order (when sorted by SalesOrderDetailID). If the row happens to actually be the very first row of the order, then performing the SUM() in the subquery would produce a NULL value (because there were no previous rows), and therefore he used COALESCE() to ensure that a zero non-NULL value would be produced.

,SalesOrderDetailID

,ProductID

,OrderQty

,QtyRunTot=OrderQty

+coalesce((select sum(OrderQty)

from Sales.SalesOrderDetail

where SalesOrderID=sod.SalesOrderID

and SalesOrderDetailID<sod.SalesOrderDetailID)

,0)

from Sales.SalesOrderDetail sod

The logic is sound; however, if you ever looked under the hood and investigated any query plan involving COALESCE(), you would notice that the optimizer simply translates it into a CASE Expression. For example, this simple COALESCE() query…

select ProductID…is translated by the optimizer into the following:

,Color=coalesce(Color,'None')

from Production.Product

select ProductIDSo what does that mean for our running totals query? It’s translated into this:

,Color=case when Color is not null then Color else 'None' end

from Production.Product

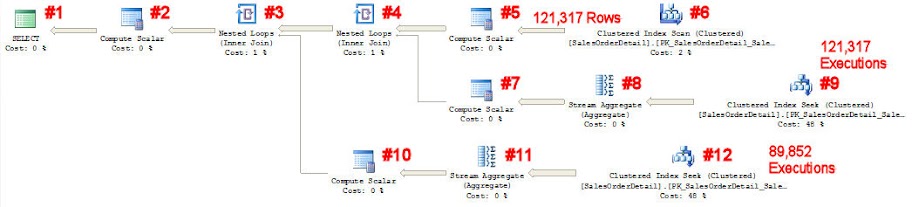

select SalesOrderIDAnd that means that, for each row, a subquery is going to be run to check for NULL-ness, and then, if it doesn’t produce a NULL value, then the same subquery is going to be run again to actually produce the mathematical SUM. Take a look at the actual execution plan for the COALESCE() query (click on the picture for to see an enlarged image):

,SalesOrderDetailID

,ProductID

,OrderQty

,QtyRunTot=OrderQty

+case

when (select sum(OrderQty)

from Sales.SalesOrderDetail

where SalesOrderID=sod.SalesOrderID

and SalesOrderDetailID<sod.SalesOrderDetailID) is not null

then (select sum(OrderQty)

from Sales.SalesOrderDetail

where SalesOrderID=sod.SalesOrderID

and SalesOrderDetailID<sod.SalesOrderDetailID)

else 0

end

from Sales.SalesOrderDetail sod

For each of the 121,317 Rows scanned in #6, the SUM() subquery is calculated by operators #7, #8, and #9. If that value is not NULL, then the SUM() subquery is calculated again by operators #10, #11, and #12. You can see that the SEEK operator #9 is executed 121,317 times… once for each row in the SalesOrderDetail table. The SEEK operator #12 is executed 89,852 times… that comes out to 121317 - 89852 = 31465 times that it was NOT executed because #7, #8, #9 returned a NULL value. Only the first line item in an order would return a NULL value, and, sure enough, there are 31465 orders in the system (and therefore 31465 “first line items”).

But what a waste of resources, repeating the SEEKing and aggregation for the majority of the line items! And the really ironic part about this whole thing is what is behind the Compute Scalar operators #7 and #10. If you look at them, they are actually calculating this:

case when count(*)=0 then null else sum(OrderQty) endYep, if no line items were processed by the Stream Aggregate operators (#8 and #11), then the Compute Scalar operators #7 and #10 purposely set the SUM() equal to NULL. And ironically, we have to check for that NULL and COALESCE() it back into a zero. It’s like we’re working at cross purposes.

Anyway, we can eliminate the repetition and waste in the query plan in a couple of ways. The easiest way is to use T-SQL’s proprietary ISNULL() function instead of (the ANSI-standard function) COALESCE():

select SalesOrderIDOr you could re-write the query so that the SUM() subquery is inclusive of the current row… Note how the subquery below now has a less-than-or-equals sign rather than a less-than sign… This way no checking for NULL is required because the subquery will always process at least one row and therefore return a non-NULL value:

,SalesOrderDetailID

,ProductID

,OrderQty

,QtyRunTot=OrderQty

+isnull((select sum(OrderQty)

from Sales.SalesOrderDetail

where SalesOrderID=sod.SalesOrderID

and SalesOrderDetailID<sod.SalesOrderDetailID)

,0)

from Sales.SalesOrderDetail sod

select SalesOrderIDSo now that you know how COALESCE() is translated behind the scenes, you know that it is most likely not a good idea to apply it to scalar subqueries.

,SalesOrderDetailID

,ProductID

,OrderQty

,QtyRunTot=(select sum(OrderQty)

from Sales.SalesOrderDetail

where SalesOrderID=sod.SalesOrderID

and SalesOrderDetailID<=sod.SalesOrderDetailID)

from Sales.SalesOrderDetail sod

NOT EXISTS ==> LEFT (ANTI SEMI) JOIN

Someone recently posted a message on the forum regarding multiple NOT EXISTS predicates in his WHERE clause. He noticed that if he moved a NOT EXISTS predicate from the beginning of the WHERE clause to the end of the clause (after other NOT EXISTS predicates), the query would improve its performance considerably.

This seemed strange. It shouldn’t matter what order you specify your conditions in a WHERE clause, because the optimizer should figure out the most cost-effective way to apply the various predicates.

Right?

Well… er… no.

What he said was absolutely true, which I can demonstrate here.

First, create 3 tables… a Customer table, and two child tables called CustHugeRowSize and CustTeenyRowSize. All three tables have clustered indexes with CustomerID as the primary column of the index:

if object_id('TempDB..#Customers','U') is not null drop table #CustomersNow populate the Customer table with 100,000 rows, and populate the two child tables with 3 rows for each of those customers… with the exception of CustomerID 98765:

go

create table #Customers

(

CustomerID int primary key

,Name varchar(30)

)

go

if object_id('TempDB..#CustHugeRowSize','U') is not null drop table #CustHugeRowSize

go

create table #CustHugeRowSize

(

CustomerID int

,SeqID int identity(1,1)

,Filler char(3500) default replicate('*',3500)

)

go

create unique clustered index PK_CustHuge on #CustHugeRowSize(CustomerID,SeqID)

go

if object_id('TempDB..#CustTeenyRowSize','U') is not null drop table #CustTeenyRowSize

go

create table #CustTeenyRowSize

(

CustomerID int

,SeqID int identity(1,1)

,Junk bit default 0

)

go

create unique clustered index PK_CustTeeny on #CustTeenyRowSize(CustomerID,SeqID)

go

withMake sure the statistics are completely up-to-date in both child tables:

L0(c) as (select 0 union all select 0 union all select 0) --3 Rows

,L1(c) as (select 0 from L0 a cross join L0 b cross join L0 c) --27 Rows (3x3x3)

,L2(c) as (select 0 from L1 a cross join L1 b cross join L1 c) --19683 Rows (27x27x27)

,L3(c) as (select 0 from L2 a cross join L2 b) --387,420,489 Rows (19683x19683)

,NN(n) as (select row_number() over (order by (select 0)) from L3)

insert #Customers (CustomerID,Name)

select n,'Customer Number '+ltrim(str(n))

from NN

where n<=100000

insert #CustHugeRowSize (CustomerID)

select CustomerID

from #Customers

cross join (select 1 union all select 2 union all select 3) X(N)

where CustomerID<>98765

insert #CustTeenyRowSize (CustomerID)

select CustomerID

from #Customers

cross join (select 1 union all select 2 union all select 3) X(N)

where CustomerID<>98765

update statistics #CustHugeRowSize with fullscanAnd now let’s try two queries… both have two NOT EXISTS predicates, and the only difference between them is which NOT EXISTS predicate is specified first:

update statistics #CustTeenyRowSize with fullscan

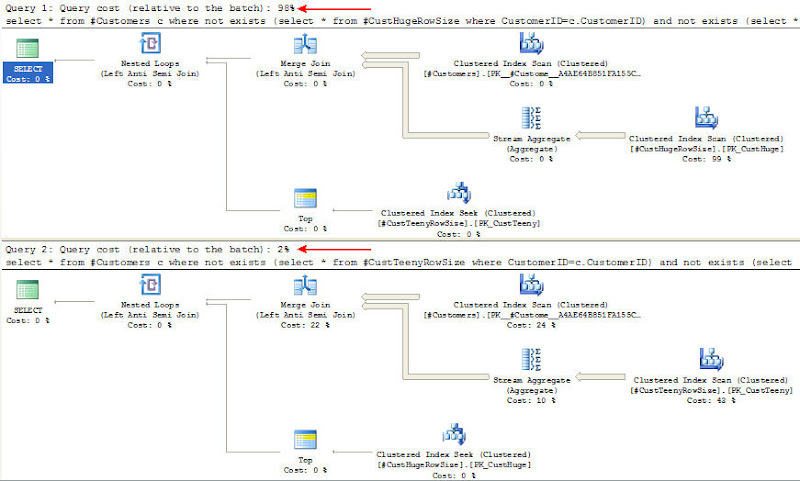

select *These two plans certainly produce the same result; however, as you can see in comparing their execution plans, the behavior (and performance) of the two queries is vastly different (click on the picture to see an enlarged image):

from #Customers c

where not exists (select * from #CustHugeRowSize where CustomerID=c.CustomerID)

and not exists (select * from #CustTeenyRowSize where CustomerID=c.CustomerID)

/*

CustomerID Name

---------- ---------------------

98765 Customer Number 98765

*/

select *

from #Customers c

where not exists (select * from #CustTeenyRowSize where CustomerID=c.CustomerID)

and not exists (select * from #CustHugeRowSize where CustomerID=c.CustomerID)

/*

CustomerID Name

---------- ---------------------

98765 Customer Number 98765

*/

Did you see that comparison in cost? It’s a whopping 98% compared to 2%! And, sure enough, when you compare the two queries in the Profiler, you get these statistics:

/*

Description CPU Reads Duration

------------------------------------

Query #1 1265ms 151464 28509ms

Query #2 161ms 1160 447ms

*/

Wow! Why the huge difference? As I’ve mentioned in a previous blog post, when we look under the hood, we can see that a NOT EXISTS predicate is translated into a LEFT ANTI SEMI JOIN, which is a LEFT JOIN that only outputs rows from the LEFT side (SEMI) if there are no matching rows (ANTI) in the right side.

In the first query, a MERGE JOIN is used between the Customers table and the CustHugeRowSize table to do the LEFT ANTI SEMI JOIN to find the rows in Customers that do NOT EXIST in the CustHugeRowSize table. That means the engine must SCAN both tables (or, more accurately, SCAN the clustered indexes of both tables). It takes a while to SCAN the entire CustHugeRowSize table, because it consists of 299,997 rows, each of which are over 3500 bytes in size… only two rows can fit on a 8192-byte page. So, the engine has to scan through 299997 / 2 = 149,999 (leaf) pages, which is over a gigabyte of data.

In the second query, on the other hand, the MERGE JOIN is done between the Customers table and the CustTeenyRowSize table. Even though the CustTeenyRowSize table also has 299,997 rows, it takes no time at all to SCAN it, because its row size is only 16 bytes, so over 500 rows can fit on a single data page, and so the engine only has to scan through approximately 299997 / 500 = 600 pages = 4.8 megabytes. That’s 250 times smaller than the CustHugeRowSize table.

Well, that’s very interesting, and it explains why the first query is so much slower, but why in the heck would the order of NOT EXISTS predicates, and therefore, the order of the LEFT JOINs, make a difference?

The answer is: “I don’t know”… it shouldn’t make any difference… especially when the ON condition of the multiple JOINs refer solely back to the table in the FROM clause and not to each other.

For example, take a look at the following 3 queries. They each have the same 3 LEFT JOINs, specified in different orders, with each JOIN referring back to the FROM table in the ON condition and not referring to each other at all:

select c.CustomerIDThe queries are functionally the same… they produce the same result… however, each of the queries produces a completely different query plan! In each case, the FROM table and the first specified LEFT JOIN table are always processed together in a Merge Join. And the costs of the three queries are different as well: 35% : 33% : 31%. And, running them in Profiler shows that the first query is, in fact, slowest and the third query is fastest:

from Sales.Customer c

left join Sales.StoreContact sc on c.CustomerID=sc.CustomerID

left join Sales.SalesOrderHeader soh on c.CustomerID=soh.CustomerID

left join Sales.CustomerAddress ca on c.CustomerID=ca.CustomerID

select c.CustomerID

from Sales.Customer c

left join Sales.SalesOrderHeader soh on c.CustomerID=soh.CustomerID

left join Sales.StoreContact sc on c.CustomerID=sc.CustomerID

left join Sales.CustomerAddress ca on c.CustomerID=ca.CustomerID

select c.CustomerID

from Sales.Customer c

left join Sales.CustomerAddress ca on c.CustomerID=ca.CustomerID

left join Sales.SalesOrderHeader soh on c.CustomerID=soh.CustomerID

left join Sales.StoreContact sc on c.CustomerID=sc.CustomerID

/*

Description CPU Reads Duration

------------------------------------

Query #1 126ms 256 421ms

Query #2 114ms 253 351ms

Query #3 95ms 296 291ms

*/

It seems to me that the optimizer should figure out which is the best approach and choose the most cost-effective plan possible… in other words, it should ignore the order of my multiple outer joins and come up with the best, most cost-effective plan (i.e. the same plan) for each of those three queries. I’ve inquired about this behavior with Microsoft but have not yet heard anything. Conor Cunningham did respond publicly in his blog last week, but, unless I misunderstood what he wrote, he indicates that the order of LEFT JOINs does not matter, which is the exact opposite of what I demonstrated above.

So, for the time being, it seems to me that if you have multiple NOT EXISTS predicates in your WHERE clause, or if you have multiple OUTER JOINs in a query, you may want to tinker with their order to make sure you come up with the most effective execution plan possible.

Thanx Brad. I was hoping Conner would respond to your question. (And mine... unless you want to pick it up :) )

ReplyDeleteThat note about ISNULL is excellent. I use ISNULL for clarity. But now i have another reason.

In the example above, does it make sense to wrap the entire query inside another query that does the COALESCE?

Thanks for the comment, Brian.

ReplyDeleteI tend to prefer COALESCE()... not only because it's a cool word... but because it is ANSI standard, and it accepts multiple arguments, and it doesn't have the quirk that ISNULL() has with forcing the result to be the exact datatype (and length) of the first argument. However, ISNULL is certainly preferable when it comes to scalar subqueries.

Regarding your COALESCE question... I'm not sure I follow what you're asking... but anytime you have a subquery of some kind as the first argument of COALESCE, you'll get the repeating behavior.

Regarding your question on Conor's blog... As far as I know, it won't make any difference whatsoever. Since the first predicate in your WHERE clause establishes that a.id=b.id, then it won't matter if the second join condition is a.id=c.id or b.id=c.id... they are equivalent (i.e. it's just mathematical substitution).

For the COALESCE, i was thinking you could wrap the entire query up as a CTE, and use COALESCE when SELECTing the value out. Wondering if that would save the doubled query/

ReplyDelete@Brian:

ReplyDeleteI see what you're saying now... I tried it and it works... only one pass. Good alternative! Thanks!

WITH cte AS

(

SELECT ..., QtyRunTot=OrderQty+(subquery)

FROM Sales.SalesOrderDetail sod

)

SELECT ..., QtyRunTot=COALESCE(QtyRunTot,OrderQty)

FROM cte

Cool stuff Brad.

ReplyDeleteSometimes i wonder what exactly the CTE hides.

Hmm.... If a CTE can make it one pass, howsabout CROSS APPLY? :)

Maybe i should test this stuff myself...

You're on a roll, Brian!

ReplyDeleteYes, CROSS APPLY will work also... only one pass:

SELECT ..., QtyRunTot=OrderQty+COALESCE(PrevRunTot,0)

FROM Sales.SalesOrderDetail sod

CROSS APPLY (SELECT PrevRunTot=SUM(OrderQty) ... ) F1

I think I'll have to update the blog with these suggestions... Thanks!

Of course, you know that by suggesting these, it's putting more nails into ISNULL's grave, don't you? 8^)

Oops... I meant "coffin", not "grave".

ReplyDeleteHeh. I'm not exactly very big on ANSI standards. inner/outer joins really make me wonder if they have any brains. It looks nice, but it's just plain wrong.

ReplyDeleteI'm all for using specific FUNCTIONs. SQL Server's ISNULL(), Oracle's NVL() (and NVL2()), or whatever else they have. It makes sense because each DB has it's own implementation flavor, and the available FUNCTIONs are a part of that.

Some standardization is nice. Such as the structure and various clauses of the statement. Past that, i'm not sure i care.

Very interesting article!

ReplyDeleteI do find the LEFT ordering implications concerning.

Hey Brad,

ReplyDeleteI seem to recall that EXISTS is never re-ordered for backward-compatibility reasons: older versions of SQL always evaluated (anti) semi joins in textual order and some code out there relies on it.

This is a vague recollection - I'm still searching for a reference to confirm that.

I would expect the non-re-ordering to extend to EXCEPT and INTERSECT for similar reasons.

Single-sided outer joins have very complex re-write rules, I'm not sure whether the current optimiser can re-order them - even for hierarchical structures - though Conor should know, I guess :)

It will be interesting to see how Conor responds on this one. Fascinating blog post!

Paul

@Paul:

ReplyDeleteThanks for the comment. What you say is interesting regarding the backward compatibility.

I agree about complex rewrites of OUTER JOINs, but the examples I gave are the simplest of OUTER JOINs in that they refer ONLY to the FROM table and are not interconnected, so the order that they are processed should not matter and the optimizer should recognize that and choose the "best" (i.e. cheaper) approach.

I'm still waiting for word from Microsoft on the whole issue. I don't want to bother Conor directly... I know he's busy, though I'm surprised he hasn't said anything at all on his blog... but some friends and I are "working on" MSFT to get an answer to this. We've been hounding them for a couple weeks now. 8^)

Once we find out something, I'll certainly pass it on.

--Brad

Is there any kind of caching that could influence execution time of the three queries?

ReplyDeleteCan the order in which queries are executed influence the execution time?

(like some pages are still in CPUs cache, in HDD cache, in RAM, the DB's cache, or something like that)

Hi Marcin...

DeleteFrom what I remember, I ran each query individually and I cleared out the buffer and plan cache before each test (DBCC DROPCLEANBUFFERS and DBCC FREEPROCCACHE). So no test influenced any other in terms of cached data.

--Brad

good:)

ReplyDelete