This blog entry is participating in T-SQL Tuesday #016, hosted this month by Jes Schultz Borland (also known as grrlgeek). And no, we are not related, because you can plainly see that she puts an extraneous “t” in Schulz. (Sorry, Jes, I couldn’t resist).

This blog entry is participating in T-SQL Tuesday #016, hosted this month by Jes Schultz Borland (also known as grrlgeek). And no, we are not related, because you can plainly see that she puts an extraneous “t” in Schulz. (Sorry, Jes, I couldn’t resist).You are invited to visit her blog and join the party and read more blogs participating in this month’s theme: Aggregates.

In May and June of 2010, I had the pleasure of giving a talk on Window Functions to the San Francisco and Silicon Valley SQL Server User Groups. I’ve been meaning to put together a blog post or two consisting of highlights of those presentations, but I just never got around to it.

Jes’ T-SQL Tuesday invitation was the perfect catalyst… And so this blog post will be specifically about Aggregate Window Functions (AWF) and some tips and tricks and traps.

Who Am I? Where Do I Fit In?

Consider the following query, which gives a detailed list of all the orders in AdventureWorks. The result is sorted by CustomerID and SalesOrderID for our convenience:

select CustomerIDTake a look at the first row in this result set… Order Number 43860. This row has an identity complex.

,SalesOrderID

,SalesPersonID

,OrderDate=convert(date,OrderDate)

,OrderDollars=TotalDue

from AdventureWorks.Sales.SalesOrderHeader

order by CustomerID

,SalesOrderID

/*

CustomerID SalesOrderID SalesPersonID OrderDate OrderDollars

---------- ------------ ------------- ---------- ------------

1 43860 280 2001-08-01 14603.7393

1 44501 280 2001-11-01 26128.8674

1 45283 280 2002-02-01 37643.1378

1 46042 280 2002-05-01 34722.9906

2 46976 283 2002-08-01 10184.0774

2 47997 283 2002-11-01 5469.5941

. . .

(31465 Rows Total)

*/

Sure, it knows that it’s an order for CustomerID 1, and it was handled by SalesPersonID 280, and it was ordered in the month of August 2001, and it was worth about $14600.

But what does all that really mean? The row is wondering: “What is my place in the world? How do I stack up against other rows? Who am I? I know that I belong to CustomerID 1, but am I that customer’s only order? Or am I one of many? What about my order amount of $14600? Am I a small order for that customer? Or am I huge? What percentage of CustomerID 1’s orders is attributable to me? What about my SalesPerson? Is my order amount above or below average for that SalesPerson? What about compared to ALL orders in AdventureWorks?”

This row has a lot of questions about the world outside of its little 1-row universe.

Since we sorted the result by CustomerID, it’s easy to see from our perspective the partition of rows for CustomerID number 1. And we can see quickly that Order 43860 is just 1 of 4 orders for this Customer, and, if we’re quick with mental calculations, we can see that the average dollars for that Customer’s orders is around $28000, so that makes Order 43860 below average.

We’ve always had the ability to analyze partitions (or groups) of data with the GROUP BY clause, like so:

select CustomerIDAnd we can also get information on the large single partition of ALL orders:

,NumOrders=count(*)

,TotalDollars=sum(TotalDue)

,AverageDollars=avg(TotalDue)

from AdventureWorks.Sales.SalesOrderHeader

group by CustomerID

order by CustomerID

/*

CustomerID NumOrders TotalDollars AverageDollars

---------- --------- ------------ --------------

1 4 113098.7351 28274.6837

2 8 32733.9695 4091.7461

3 12 479506.3256 39958.8604

4 8 780035.2121 97504.4015

5 8 114218.8006 14277.35

. . .

(19119 Rows Total)

*/

select NumOrders=count(*)But in getting that summary information, we lose the detail.

,TotalDollars=sum(TotalDue)

,AverageDollars=avg(TotalDue)

from AdventureWorks.Sales.SalesOrderHeader

/*

NumOrders TotalDollars AverageDollars

--------- -------------- --------------

31465 140707584.8246 4471.8762

*/

The Window Functions introduced in SQL2005 allow us to “marry” the detail and the summary information… and we also are not limited to partitioning the data in only one way.

There are Ranking Window Functions like ROW_NUMBER, RANK, DENSE_RANK, and NTILE, but I want to focus instead on Aggregate Window Functions, like SUM, COUNT, AVG, MIN, MAX, etc.

What differentiates a regular Aggregate from a Windowed Aggregate is the OVER clause, where we can specify how we want to partition the data:

Aggregate_Function( <parameter> ) OVER ( [PARTITION BY <partition_list>] )The OVER clause defines how we want to partition the data into groups of rows, and the Aggregate Function is applied to each of those groups, returning a value for that group.

Note that the PARTITION BY clause is optional, but if it is left out, then it is implied that the aggregate is applied to the partition of the entire result set.

So, with that in mind, let’s look at our original query once again, but this time, through Aggregate Window Functions, let’s answer some of Order 43860’s questions. How many order does its customer have? What percent of the customer’s orders is its value responsible for? Is its value above or below average for the customer? What about for the salesperson? What about the entire company?

select CstID=CustomerIDThis is great! Now Order 43860 knows so much more about itself and how it fits into the world, and we have relieved it of the burden of expensive therapy sessions.

,OrdID=SalesOrderID

,SPsnID=SalesPersonID

,Dollars=TotalDue

,[#CstOrds]=count(*) over (partition by CustomerID)

,[%CustTot]=TotalDue / sum(TotalDue) over (partition by CustomerID) * 100

,[+/-CustAvg]=TotalDue - avg(TotalDue) over (partition by CustomerID)

,[+/-SlsPsnAvg]=TotalDue - avg(TotalDue) over (partition by SalesPersonID)

,[+/-OverallAvg]=TotalDue - avg(TotalDue) over ()

from AdventureWorks.Sales.SalesOrderHeader

order by CustomerID, SalesOrderID

/*

CstID OrdID SPsnID Dollars #CstOrds %CustTot +/-CustAvg +/-SlsPsnAvg +/-OverallAvg

----- ----- ------ ---------- -------- -------- ----------- ------------ -------------

1 43860 280 14603.7393 4 12.91 -13670.9444 -32270.8068 10131.8631

1 44501 280 26128.8674 4 23.10 -2145.8163 -20745.6787 21656.9912

1 45283 280 37643.1378 4 33.28 9368.4541 -9231.4083 33171.2616

1 46042 280 34722.9906 4 30.70 6448.3069 -12151.5555 30251.1144

2 46976 283 10184.0774 8 31.11 6092.3313 -16428.8692 5712.2012

2 47997 283 5469.5941 8 16.70 1377.848 -21143.3525 997.7179

2 49054 283 1739.4078 8 5.31 -2352.3383 -24873.5388 -2732.4684

. . .

(31465 Rows Total)

*/

What is a Result Set?

It’s important to know, however, that these Window Functions are applied to the Result Set, and not to the original source data (i.e. the tables) of the query.

For example, if we add a WHERE clause to that last query to only look at orders placed in the year 2001, then that completely changes the Result Set…

select CstID=CustomerID…And we see that Order 43860’s perspective completely changes. Now he’s only one of 2 orders of CustomerID 1, and he now makes up a full 35.85% of that customer’s order total (as opposed to only 12.91%). And he’s only $4000 above the overall average of all orders in AdventureWorks (as opposed to $10000 above average).

,OrdID=SalesOrderID

,SPsnID=SalesPersonID

,Dollars=TotalDue

,[#CstOrds]=count(*) over (partition by CustomerID)

,[%CustTot]=TotalDue / sum(TotalDue) over (partition by CustomerID) * 100

,[+/-CustAvg]=TotalDue - avg(TotalDue) over (partition by CustomerID)

,[+/-SlsPsnAvg]=TotalDue - avg(TotalDue) over (partition by SalesPersonID)

,[+/-OverallAvg]=TotalDue - avg(TotalDue) over ()

from AdventureWorks.Sales.SalesOrderHeader

where OrderDate>='20010101' and OrderDate<'20020101'

order by CustomerID, SalesOrderID

/*

CstID OrdID SPsnID Dollars #CstOrds %CustTot +/-CustAvg +/-SlsPsnAvg +/-OverallAvg

----- ----- ------ ---------- -------- -------- ----------- ------------ -------------

1 43860 280 14603.7393 2 35.85 -5762.564 -25709.6621 4213.9263

1 44501 280 26128.8674 2 64.14 5762.5641 -14184.534 15739.0544

3 44124 277 20504.1755 2 51.57 627.7545 -9620.1415 10114.3625

3 44791 277 19248.6666 2 48.42 -627.7544 -10875.6504 8858.8536

11 43853 282 34946.6266 2 86.60 14771.4029 7888.1527 24556.8136

11 44495 282 5403.8208 2 13.39 -14771.4029 -21654.6531 -4985.9922

. . .

(1379 Rows Total)

*/

Because Window Functions are applied to partitions of the Result Set, it’s important to understand the logical processing order of a query so that we really know what a “Result Set” is.

In simplest terms, the logical processing order of a query’s clauses is like so:

/*

FROM and JOIN/APPLY/PIVOT/UNPIVOT

WHERE

GROUP BY

HAVING

(At this point we have a Result Set)

SELECT

ORDER BY

TOP

*/

So Window Functions only come into play after we’ve done the FROM and JOINs and WHEREs and the GROUPing and HAVING stuff. And this is why the Window Functions can only be used in the SELECT or ORDER BY clauses of a query.

What About the WHERE Clause?

You may NOT use Window Functions in a WHERE clause.

For example, let’s consider orders placed in January of 2004… Which orders had an above-average dollar amount (for that time period)? The following query, which employs a subquery to get the average for the month, will work fine:

select SalesOrderIDBut don’t you just hate having to duplicate the WHERE clause in the subquery? One might be tempted to use Window Function to get the average of the result set and put it in the WHERE clause like so:

,CustomerID

,OrderDate=convert(date,OrderDate)

,OrderDollars=TotalDue

from AdventureWorks.Sales.SalesOrderHeader

where OrderDate>='20040101' and OrderDate<'20040201'

and TotalDue>(select avg(TotalDue)

from AdventureWorks.Sales.SalesOrderHeader

where OrderDate>='20040101' and OrderDate<'20040201')

order by SalesOrderID

/*

SalesOrderID CustomerID OrderDate OrderDollars

------------ ----------- ---------- ---------------------

61173 676 2004-01-01 38511.2882

61174 622 2004-01-01 9128.8743

61175 34 2004-01-01 34218.425

61177 442 2004-01-01 28287.8223

61178 510 2004-01-01 3565.8474

. . .

(498 Rows Total)

*/

select SalesOrderIDWell, that error message makes things clear, doesn’t it? We simply can’t do it that way. But how do we accomplish our goal? We’d have to use a CTE that included the Window Function, and then in the query that uses the CTE, we can now filter out what we want:

,CustomerID

,OrderDate=convert(date,OrderDate)

,OrderDollars=TotalDue

from AdventureWorks.Sales.SalesOrderHeader

where OrderDate>='20040101' and OrderDate<'20040201'

and TotalDue>avg(TotalDue) over ()

order by SalesOrderID

/*

Msg 4108, Level 15, State 1, Line 7

Windowed functions can only appear in the SELECT or ORDER BY clauses.

*/

with SourceData asThere, that’s better.

(

select SalesOrderID

,CustomerID

,OrderDate=convert(date,OrderDate)

,OrderDollars=TotalDue

,OverallAverageDollars=avg(TotalDue) over ()

from AdventureWorks.Sales.SalesOrderHeader

where OrderDate>='20040101' and OrderDate<'20040201'

)

select SalesOrderID

,CustomerID

,OrderDate

,OrderDollars

from SourceData

where OrderDollars>OverallAverageDollars

order by SalesOrderID

/*

SalesOrderID CustomerID OrderDate OrderDollars

------------ ----------- ---------- ------------

61173 676 2004-01-01 38511.2882

61174 622 2004-01-01 9128.8743

61175 34 2004-01-01 34218.425

61177 442 2004-01-01 28287.8223

61178 510 2004-01-01 3565.8474

. . .

(498 Rows Total)

*/

The GROUP BY Puzzle

This knowledge of when the Window Functions come into play is especially important when doing a GROUP BY query.

Let’s take a look at quantities sold by Region/Territory:

select Region=t.CountryRegionCodeWe get a request to show how each territory contributes (percentage-wise) to the grand total. We might be tempted to do that by using the SUM Aggregate Window Function to calculate the grand total of the entire result set and divide each row’s total by that grand total to get a percentage.

,Territory=t.Name

,OrderTotal=sum(OrderQty)

from AdventureWorks.Sales.SalesOrderDetail d

join AdventureWorks.Sales.SalesOrderHeader h on d.SalesOrderID=h.SalesOrderID

join AdventureWorks.Sales.SalesTerritory t on h.TerritoryID=t.TerritoryID

group by t.CountryRegionCode

,t.Name

order by Region

,Territory

/*

Region Territory OrderTotal

------ -------------- ----------

AU Australia 18293

CA Canada 49381

DE Germany 13143

FR France 19906

GB United Kingdom 20099

US Central 19493

US Northeast 19843

US Northwest 36776

US Southeast 18875

US Southwest 59105

*/

select Region=t.CountryRegionCodeHuh? What does that error message mean? OrderQty is not in the SELECT list… it’s part of an aggregate. Why does the optimizer think it’s in the SELECT list?

,Territory=t.Name

,OrderTotal=sum(OrderQty)

,PercentOfTotal=100.0 * sum(OrderQty) / sum(OrderQty) over ()

from AdventureWorks.Sales.SalesOrderDetail d

join AdventureWorks.Sales.SalesOrderHeader h on d.SalesOrderID=h.SalesOrderID

join AdventureWorks.Sales.SalesTerritory t on h.TerritoryID=t.TerritoryID

group by t.CountryRegionCode

,t.Name

order by Region

,Territory

/*

Msg 8120, Level 16, State 1, Line 4

Column 'AdventureWorks.Sales.SalesOrderDetail.OrderQty' is invalid in the select list

because it is not contained in either an aggregate function or the GROUP BY clause.

*/

Let’s take a step back for a moment. What was the result set of our original query? It was 3 columns: Region, Territory, and OrderTotal. That OrderTotal column was simply SUM(OrderQty). So then what would the Grand Total of the entire result set be? Ah-hah! It would be the SUM of all the SUM(OrderQty)’s. So that’s what we have to use in our Aggregate Window Function:

select Region=t.CountryRegionCodeSo, in short, we can’t apply an Aggregate Window Function to the expression OrderQty, because by the time that the GROUP BY does its job, the OrderQty column is not available anymore… It is not in the Result Set. However, SUM(OrderQty) was in our Result Set, so we can apply our SUM function to that expression… thus, SUM(SUM(OrderQty)).

,Territory=t.Name

,OrderTotal=sum(OrderQty)

,PercentOfTotal=100.0 * sum(OrderQty) / sum(sum(OrderQty)) over ()

from AdventureWorks.Sales.SalesOrderDetail d

join AdventureWorks.Sales.SalesOrderHeader h on d.SalesOrderID=h.SalesOrderID

join AdventureWorks.Sales.SalesTerritory t on h.TerritoryID=t.TerritoryID

group by t.CountryRegionCode

,t.Name

order by Region

,Territory

/*

Region Territory OrderTotal PercentOfTotal

------ -------------- ---------- ---------------

AU Australia 18293 6.654080912576

CA Canada 49381 17.962344587761

DE Germany 13143 4.780767803749

FR France 19906 7.240809853263

GB United Kingdom 20099 7.311013626079

US Central 19493 7.090581054438

US Northeast 19843 7.217893595815

US Northwest 36776 13.377274347614

US Southeast 18875 6.865783481379

US Southwest 59105 21.499450737321

*/

ORDER BY Uses

One final word on Aggregate Window Functions: Using an Aggregate Window Function in the SELECT clause makes sense. But how the heck would you use one in an ORDER BY?

Here’s an interesting example of how that might be useful. Let’s take a look at the top 100 orders (in terms of dollars). Let’s see which SalesPersons are associated with those orders:

select top 100 SalesPersonIDThat gives us the detail about those Top 100 Orders. But it doesn’t give us any kind of perspective. We can see that Jauna Elson had the two biggest orders in the company, but does she have any others? What if we sort the result so that the SalesPerson who has the most orders is at the top of the result, and the SalesPerson with the second most orders is next, and so on? All we have to do is ORDER BY the COUNT of orders of each partition of SalesPersonID, like so:

,FirstName

,LastName

,SalesOrderID

,OrderDate=convert(date,OrderDate)

,TotalDue

from AdventureWorks.Sales.SalesOrderHeader soh

join AdventureWorks.Person.Contact c on soh.SalesPersonID=c.ContactID

order by TotalDue desc

/*

SalesPersonID FirstName LastName SalesOrderID OrderDate TotalDue

------------- --------- -------- ------------ ---------- -----------

281 Jauna Elson 51131 2003-07-01 247913.9138

281 Jauna Elson 55282 2003-10-01 227737.7215

285 Gail Erickson 46616 2002-07-01 207058.3754

286 Mark Erickson 46981 2002-08-01 201490.4144

275 Maciej Dusza 47395 2002-09-01 198628.3054

. . .

286 Mark Erickson 51735 2003-08-01 123218.8124

286 Mark Erickson 53497 2003-09-01 122462.2807

275 Maciej Dusza 51153 2003-07-01 122035.2702

281 Jauna Elson 44127 2001-09-01 121141.6984

285 Gail Erickson 46959 2002-08-01 120843.8322

(100 Rows Total)

*/

select top 100 SalesPersonIDIsn’t that cool? We see that Linda Ecoffey has the most orders, so she’s at the top, and at the bottom of the list, we see---

,FirstName

,LastName

,SalesOrderID

,OrderDate=convert(date,OrderDate)

,TotalDue

from AdventureWorks.Sales.SalesOrderHeader soh

join AdventureWorks.Person.Contact c on soh.SalesPersonID=c.ContactID

order by count(*) over (partition by SalesPersonID) desc

/*

SalesPersonID FirstName LastName SalesOrderID OrderDate TotalDue

------------- --------- -------- ------------ ---------- ----------

277 Linda Ecoffey 71894 2004-06-01 92952.5948

277 Linda Ecoffey 71908 2004-06-01 2791.3805

277 Linda Ecoffey 71939 2004-06-01 972.785

277 Linda Ecoffey 71940 2004-06-01 668.3798

277 Linda Ecoffey 71929 2004-06-01 66.2255

. . .

277 Linda Ecoffey 63274 2004-02-01 486.3925

277 Linda Ecoffey 63286 2004-02-01 449.506

277 Linda Ecoffey 63221 2004-02-01 50.2703

277 Linda Ecoffey 63226 2004-02-01 50.2703

277 Linda Ecoffey 63232 2004-02-01 102.1309

(100 Rows Total)

*/

What a minute…

Something’s wrong here. Linda Ecoffey is the only one in the report. Where’s Jauna Elson? Why are piddly little $50 orders showing up in our query?

Any idea?

It’s the Logical Processing Order of the query coming into play again! What is the very last thing that happens in a query? The TOP operator! Our COUNT(*) Window Function was applied to CustomerID partitions of ALL orders… and THEN the TOP operator was applied.

So once again, we have to split the query up and get the Top 100 Orders in a CTE, and THEN use the ORDER BY in the main query that uses that CTE:

with Top100Sales asSo that’s more like it. Linda Ecoffey is the top sales person in terms of having the most orders of the Top 100. Then comes Shelley Dyck and her orders (not all listed). And at the bottom of the list is Gary Drury with only one order in the Top 100.

(

select top 100 SalesPersonID

,FirstName

,LastName

,SalesOrderID

,OrderDate=convert(date,OrderDate)

,TotalDue

from AdventureWorks.Sales.SalesOrderHeader soh

join AdventureWorks.Person.Contact c on soh.SalesPersonID=c.ContactID

order by TotalDue desc

)

select *

from Top100Sales

order by count(*) over (partition by SalesPersonID) desc

/*

SalesPersonID FirstName LastName SalesOrderID OrderDate TotalDue

------------- --------- --------- ------------ ---------- -----------

277 Linda Ecoffey 43884 2001-08-01 154912.0712

277 Linda Ecoffey 44528 2001-11-01 144355.8792

277 Linda Ecoffey 46660 2002-07-01 162629.7468

277 Linda Ecoffey 53506 2003-09-01 142587.761

277 Linda Ecoffey 47027 2002-08-01 141111.7487

277 Linda Ecoffey 50683 2003-06-01 140349.1998

277 Linda Ecoffey 44530 2001-11-01 139414.5241

277 Linda Ecoffey 46067 2002-05-01 135306.5844

277 Linda Ecoffey 49888 2003-04-01 128123.3556

277 Linda Ecoffey 47658 2002-10-01 132199.8023

277 Linda Ecoffey 46642 2002-07-01 130825.509

277 Linda Ecoffey 46066 2002-05-01 133271.8758

277 Linda Ecoffey 47712 2002-10-01 133229.5585

277 Linda Ecoffey 47990 2002-11-01 133056.7092

277 Linda Ecoffey 46629 2002-07-01 132987.326

277 Linda Ecoffey 48048 2002-11-01 126309.4039

276 Shelley Dyck 50304 2003-05-01 123326.9867

276 Shelley Dyck 69471 2004-05-01 131279.032

276 Shelley Dyck 44777 2001-12-01 132235.1616

. . .

283 Terry Eminhizer 51123 2003-07-01 125068.34

283 Terry Eminhizer 46643 2002-07-01 149897.3647

283 Terry Eminhizer 51711 2003-08-01 136046.4425

287 Martha Espinoza 50297 2003-05-01 137108.3865

287 Martha Espinoza 69508 2004-05-01 140734.4875

289 Janeth Esteves 55254 2003-10-01 140521.5049

289 Janeth Esteves 51761 2003-08-01 146293.234

280 Shannon Elliott 47033 2002-08-01 125254.4859

280 Shannon Elliott 67297 2004-04-01 125144.0084

268 Gary Drury 51830 2003-08-01 149861.0659

(100 Rows Total)

*/

So this is a novel way of looking at a query result and seeing at a glance that Linda is our big gun and she really leaves Gary in the dust.

But is this fair? Maybe the quantity of orders is not a good representation of who is our big gun. Perhaps finding out who brings in the highest average dollar amount of orders should be put at the top. Easy enough… We’ll just change our ORDER BY clause:

with Top100Sales asSo Jauna is the champion in terms of highest-dollar average orders, and our friend Gary Drury, who ranked dead last in the previous query, redeems himself in this one because, although he only has one order, it is for a large amount, making him the second highest SalesPerson in terms of average dollars. Linda Ecoffey comes out towards the bottom.

(

select top 100 SalesPersonID

,FirstName

,LastName

,SalesOrderID

,OrderDate=convert(date,OrderDate)

,TotalDue

from AdventureWorks.Sales.SalesOrderHeader soh

join AdventureWorks.Person.Contact c on soh.SalesPersonID=c.ContactID

order by TotalDue desc

)

select *

from Top100Sales

order by avg(TotalDue) over (partition by SalesPersonID) desc

/*

SalesPersonID FirstName LastName SalesOrderID OrderDate TotalDue

------------- --------- --------- ------------ ---------- -----------

281 Jauna Elson 44762 2001-12-01 123265.0706

281 Jauna Elson 61184 2004-01-01 125364.2684

281 Jauna Elson 50252 2003-05-01 126565.2584

281 Jauna Elson 44127 2001-09-01 121141.6984

281 Jauna Elson 47441 2002-09-01 153055.214

281 Jauna Elson 47018 2002-08-01 144864.8759

281 Jauna Elson 47369 2002-09-01 189198.6156

281 Jauna Elson 51131 2003-07-01 247913.9138

281 Jauna Elson 55282 2003-10-01 227737.7215

281 Jauna Elson 67305 2004-04-01 155260.0958

281 Jauna Elson 48043 2002-11-01 129398.8662

281 Jauna Elson 44795 2001-12-01 139562.6866

268 Gary Drury 51830 2003-08-01 149861.0659

286 Mark Erickson 57143 2003-11-01 126349.7887

286 Mark Erickson 50222 2003-05-01 124696.565

286 Mark Erickson 51735 2003-08-01 123218.8124

. . .

277 Linda Ecoffey 47990 2002-11-01 133056.7092

277 Linda Ecoffey 46629 2002-07-01 132987.326

277 Linda Ecoffey 48048 2002-11-01 126309.4039

283 Terry Eminhizer 51123 2003-07-01 125068.34

283 Terry Eminhizer 46643 2002-07-01 149897.3647

283 Terry Eminhizer 51711 2003-08-01 136046.4425

280 Shannon Elliott 47033 2002-08-01 125254.4859

280 Shannon Elliott 67297 2004-04-01 125144.0084

(100 Rows Total)

*/

In Conclusion

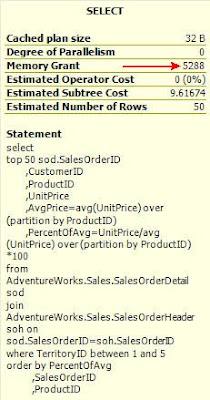

I hope this article gave you some insight into Aggregate Window Functions (AWF)… If you can’t get enough of this stuff, please also read a blog post I wrote last year called OVER Exposed, in which I talk in more detail about AWF’s and more gotchas and what really goes on under the hood with them.

Now go out there and produce some AWF-Inspiring Queries!