First of all, the database has impossible-to-decipher 5-character table names, and their column names are just as bad, so I’m dealing with objects with awful names that look like XQUMB.XUMHF and YRWNO.YWNVZ.

Second of all, every table in the database is a Heap with no primary keys or indexes defined at all.

Great, huh?

But wait… that’s not all!

There are no small simple surrogate keys to logically tie the tables together in any kind of a relationship. Many of the tables have a logical key made up of multiple columns. So when I look at the stored procedures that JOIN the tables together, I see ugly stuff like this:

select X.XUMHFDoesn’t that look like fun?

,X.XUMRO

,X.XUMBC

,X.XUMPU

,Y.YWNOQ

,B.BFUCP

,B.BFUVX

from XQUMB as X

join YRWNO as Y on X.XUMHF=Y.YWNHF

and X.XUMRO=Y.YWNRO

and X.XUMBC=Y.YWNBC

join BRFUP as B on Y.YWNHF=B.BFUHF

and Y.YWNRO=B.BFURO

and Y.YWNBC=B.BFUBC

and Y.YWNCP=B.BFUCP

None of the tables are really that large in terms of rows, so I figured performance would not be that big of a deal.

WRONG!

Bad assumption.

I’ve been so spoiled by only dealing with databases with well-defined keys and indexes that I never realized what a heap o’ trouble Heaps can be… especially when it comes to multi-column JOINs.

Let me illustrate with our old friend the AdventureWorks database.

Here’s a simple JOIN of 4 tables in the original database (with appropriate keys and indexes):

select p.ProductID as PIDSSMS will spit that sorted result of 121,317 rows out in under 3 seconds.

,p.Name

,d.OrderQty

,h.SalesOrderNumber

,h.OrderDate

,c.TerritoryID

from AdventureWorks.Production.Product p

join AdventureWorks.Sales.SalesOrderDetail d on p.ProductID=d.ProductID

join AdventureWorks.Sales.SalesOrderHeader h on d.SalesOrderID=h.SalesOrderID

join AdventureWorks.Sales.Customer c on h.CustomerID=c.CustomerID

order by p.ProductID

/*

PID Name OrderQty SalesOrderNumber OrderDate TerritoryID

--- --------------------- -------- ---------------- ---------- -----------

707 Sport-100 Helmet, Red 1 SO43665 2001-07-01 1

707 Sport-100 Helmet, Red 2 SO43668 2001-07-01 6

707 Sport-100 Helmet, Red 4 SO43673 2001-07-01 2

. . .

999 Road-750 Black, 52 1 SO73926 2004-06-27 4

999 Road-750 Black, 52 1 SO74094 2004-06-29 9

999 Road-750 Black, 52 1 SO74143 2004-06-30 10

(121317 rows total in 2.7 seconds)

*/

Now let’s create some Heaps out of those 4 tables. And we will change the Products table so that its logical key is in 3 parts… instead of ProductID, we will have a multiple-column logical key of ProductMainID, ProductSubID, and ProductSubSubID. And, of course, we’ll have those same 3 columns in SalesOrderDetail to act as a (logical) foreign key reference into the Products table:

select ProductID as ProductMainIDNow let’s do our query again using those Heaps, but of course, we have to use multiple columns to JOIN the #Prods and #OrdDetail tables:

,ProductID as ProductSubID

,ProductID as ProductSubSubID

,Name

into #Prods

from AdventureWorks.Production.Product

select SalesOrderID

,OrderDate

,SalesOrderNumber

,CustomerID

into #OrdHeader

from AdventureWorks.Sales.SalesOrderHeader

select SalesOrderID

,OrderQty

,LineTotal

,ProductID as ProductMainID

,ProductID as ProductSubID

,ProductID as ProductSubSubID

into #OrdDetail

from AdventureWorks.Sales.SalesOrderDetail

select CustomerID

,TerritoryID

,CustomerType

into #Custs

from AdventureWorks.Sales.Customer

select p.ProductMainID as PIDWell, that’s not that bad. Instead of 2.7 seconds, it took 5.7 seconds. It’s not the end of the world. In fact, it kind of surprises me that---

,p.Name

,d.OrderQty

,h.SalesOrderNumber

,h.OrderDate

,c.TerritoryID

from #Prods p

join #OrdDetail d on p.ProductMainID=d.ProductMainID

and p.ProductSubID=d.ProductSubID

and p.ProductSubSubID=d.ProductSubSubID

join #OrdHeader h on d.SalesOrderID=h.SalesOrderID

join #Custs c on h.CustomerID=c.CustomerID

/*

PID Name OrderQty SalesOrderNumber OrderDate TerritoryID

--- ------------------------------ -------- ---------------- ---------- -----------

878 Fender Set - Mountain 1 SO53960 2003-09-07 1

881 Short-Sleeve Classic Jersey, S 1 SO53987 2003-09-08 9

712 AWC Logo Cap 1 SO53987 2003-09-08 9

. . .

871 Mountain Bottle Cage 1 SO53426 2003-08-31 6

870 Water Bottle - 30 oz. 1 SO53426 2003-08-31 6

873 Patch Kit/8 Patches 1 SO53426 2003-08-31 6

(121317 rows total in 5.7 seconds)

*/

Oops… Sorry about that. I forgot the ORDER BY clause. Silly me!

Let’s add the ORDER BY and run it again:

select p.ProductMainID as PIDWHAT!!!??? Are you kidding me?? Just adding that ORDER BY made the time increase from 5.7 seconds to 24 minutes! The query duration increased by a whopping 25132%! Heck, I could probably sort 100,000 records myself manually with pencil and paper in under 24 minutes!

,p.Name

,d.OrderQty

,h.SalesOrderNumber

,h.OrderDate

,c.TerritoryID

from #Prods p

join #OrdDetail d on p.ProductMainID=d.ProductMainID

and p.ProductSubID=d.ProductSubID

and p.ProductSubSubID=d.ProductSubSubID

join #OrdHeader h on d.SalesOrderID=h.SalesOrderID

join #Custs c on h.CustomerID=c.CustomerID

order by p.ProductMainID

/*

OMIGOSH!!!

The addition of the ORDER BY made the query take 24 minutes!

*/

Here are the utterly shocking Profiler Statistics comparing the two queries:

/*

Description CPU Time Logical Reads Duration Time

---------------------------------------------------------------------------

Query Without ORDER BY 733ms 2,825 5,675ms = 5.7 Secs

Query With ORDER BY 1,426,536ms 46,102,492 1,431,938ms = 23.9 Mins

---------------------------------------------------------------------------

*/

So why is SQL Server so slow at sorting these rows?Well… it’s not really the Sort’s fault… it’s something else.

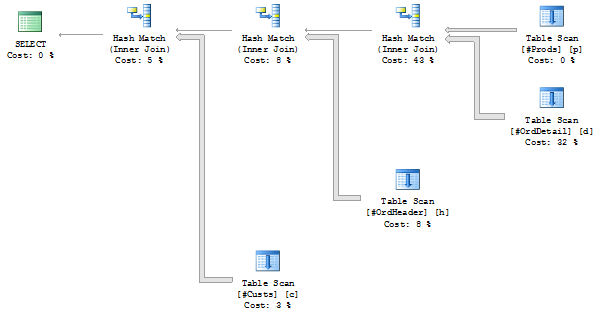

Take a look at the estimated plan of the first query without the ORDER BY:

All the tables are JOINed with Hash Join operators. That makes sense, considering there are no primary keys or indexes defined. A Merge Join operator would require pre-sorted (i.e. indexed) data and a Nested Loops Join operator would do a SEEK on its inner input (which requires an index). So a Hash Join is the best (and really only) choice to JOIN the tables.

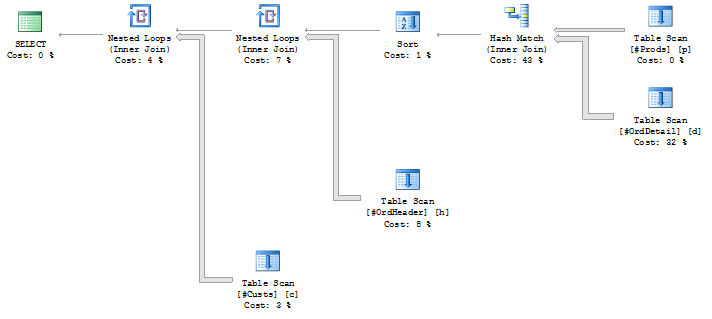

Now take a look at the estimated query plan with the ORDER BY added to the query:

Hmmm… The SORT operator is not at the top (i.e. left) of the tree but is instead placed further down the tree, with the JOIN of the #Prods and #OrdDetail tables. I guess that makes sense since we wanted the query to be ORDERed BY #Prods.ProductID. But the remaining Hash Joins got converted into Nested Loops Joins.

How come?

Notice the very thin arrow lines connecting the JOIN operators… in both plans above. The optimizer estimated that only 1 row (!!) would result from the JOIN of the #Prods and #OrdDetail tables. And because only 1 row is estimated, the optimizer figured it could just use a Nested Loops Join... because, hey, there’s only 1 row, and besides, a Nested Loops Join would preserve the order of the sorted rows, whereas a Hash Join may not.

But you and I know the reality of the situation is that there is not going to be only 1 row moving in the flow up the query tree… there are going to be 121,317 rows. The estimate is waaaay off.

Here’s what the Actual plan looks like for the 24-minute-long ORDER BY query.

Note the huge thick arrow lines now? They represent the actual number of rows processed. The Nested Loops Join to the #OrdHeader table performed a Table Scan of the 31465-row #OrdHeader table 121,317 times! So that resulted in 31465 * 121317 = 3,817,239,405 rows being processed. Similarly, a whopping 2,327,466,645 rows were processed out of the #Custs table. No wonder it took 24 minutes! Our friend Nora Nested-Loops must have been thoroughly exhausted at the end of this query!

So why the gross underestimation of the rows? Why did the optimizer figure that only 1 row would result in the JOIN of #Prods and #OrdDetail?

It’s those #$@*% multiple columns in the JOIN.

Take a look at how the estimates dramatically decrease as we add an additional predicate to the JOIN condition:

select p.ProductMainID as PIDThe optimizer does use statistics to attempt to estimate the row count, but the problem is that statistics are automatically created for each column individually… they are not created for the columns collectively. So when the optimizer sees two predicates in the JOIN condition (or three), it assumes the predicates are independent and therefore more and more selective.

,p.Name

,d.OrderQty

from #Prods p

join #OrdDetail d on p.ProductMainID=d.ProductMainID

/*

97783.6 Rows Estimated

*/

select p.ProductMainID as PID

,p.Name

,d.OrderQty

from #Prods p

join #OrdDetail d on p.ProductMainID=d.ProductMainID

and p.ProductSubID=d.ProductSubID

/*

156.38 Rows Estimated

*/

select p.ProductMainID as PID

,p.Name

,d.OrderQty

from #Prods p

join #OrdDetail d on p.ProductMainID=d.ProductMainID

and p.ProductSubID=d.ProductSubID

and p.ProductSubSubID=d.ProductSubSubID

/*

1 Row Estimated

*/

In reality, that is not always the case in the real world. For example, let’s say you had a JOIN condition based on Make and Model and Year of automobile. If we’re trying to join on (’Toyota’, ’Prius’, 2010), the optimizer would make estimates based on how selective ‘Toyota’ is among all other Makes and on how selective ‘Prius’ is among all other Models (regardless of Make). A Prius is very selective among all Models… it’s one of hundreds. But a Prius is not that selective among Toyotas… it’s only one of about a dozen or so.

So this is what I was dealing with in this database… Lots of multi-column JOINs between Heaps which totally annihilate performance because of bad estimates.

In my case I was not able to create any indexes… Don’t ask me why… I don’t want to talk about it… It was essentially outside the scope of the project.

I mentioned earlier that statistics are automatically created on individual columns referenced in JOIN/WHERE conditions if they don’t exist already, but statistics for collective multiple columns are NOT automatically created. The great thing about indexes, though, is that a multi-column index WILL create collective multiple-column statistics, so the optimizer can make much better estimates when dealing with multiple-column predicates. So chalk that up as another really good reason to use indexes.

Actually, we can manually create collective multi-column statistics on our own like so, and upon doing so, the query is much much faster:

create statistics Stat_PID_Main_Sub_SubSubWith those multi-column stats created, the estimated query plan now gets a very different shape:

on #Prods (ProductMainID,ProductSubID,ProductSubSubID)

create statistics Stat_PID_Main_Sub_SubSub

on #OrdDetail (ProductMainID,ProductSubID,ProductSubSubID)

go

select p.ProductMainID as PID

,p.Name

,d.OrderQty

,h.SalesOrderNumber

,h.OrderDate

,c.TerritoryID

from #Prods p

join #OrdDetail d on p.ProductMainID=d.ProductMainID

and p.ProductSubID=d.ProductSubID

and p.ProductSubSubID=d.ProductSubSubID

join #OrdHeader h on d.SalesOrderID=h.SalesOrderID

join #Custs c on h.CustomerID=c.CustomerID

order by p.ProductMainID

/*

2910ms Duration

*/

Unfortunately, creating these multi-column statistics was also outside of the scope of my project with this client.

What was I to do?

I ended up going with hints… Not the greatest solution, but one that I could apply quickly to the code.

You may recall a blog post I wrote in August 2010 regarding Query Hints. In one example in that blog post, I introduced a hint to force the optimizer to use a Nested Loops Join rather than a Hash Join.

In this case, though, in dealing with the Heaps and their multi-column joins, I ended up doing the exact opposite… I introduced hints to force the use of Hash Joins rather than those pesky Nested Loops Joins.

So let’s change the query to the following, specifying a Hash Join for each Join:

select p.ProductMainID as PIDThat doesn’t run quite so quickly as the query that had the advantage of the multi-column stats, but 9.7 seconds is certainly better than 24 minutes!

,p.Name

,d.OrderQty

,h.SalesOrderNumber

,h.OrderDate

,c.TerritoryID

from #Prods p

inner hash join #OrdDetail d on p.ProductMainID=d.ProductMainID

and p.ProductSubID=d.ProductSubID

and p.ProductSubSubID=d.ProductSubSubID

inner hash join #OrdHeader h on d.SalesOrderID=h.SalesOrderID

inner hash join #Custs c on h.CustomerID=c.CustomerID

order by d.ProductMainID

/*

9701ms Duration

*/

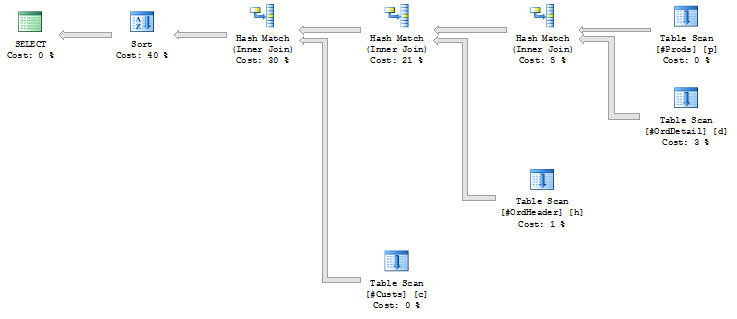

The estimated query plan for the Hash Join Hint query looks like this:

It’s pretty much just like the plan for the non-ORDERBY query, except it has a SORT operator thrown in to execute at the top of the tree, after all the JOINs. When the optimizer sees Hash Join hints, it forces the query to be processed in order of how the JOINs are introduced in the query, so it first JOINs the #Prods and #OrdDetail, then the #OrdHeader, then the #Custs table.

By the way, for those of you who want to save typing, the same thing could be accomplished via the OPTION clause (instead of specifying individual JOIN hints), forcing the optimizer to use Hash Joins for every JOIN in the query:

select p.ProductMainID as PIDWe could force the topology of the query plan to be just like the one that resulted from creating the statistics by rewriting the query like so:

,p.Name

,d.OrderQty

,h.SalesOrderNumber

,h.OrderDate

,c.TerritoryID

from #Prods p

join #OrdDetail d on p.ProductMainID=d.ProductMainID

and p.ProductSubID=d.ProductSubID

and p.ProductSubSubID=d.ProductSubSubID

join #OrdHeader h on d.SalesOrderID=h.SalesOrderID

join #Custs c on h.CustomerID=c.CustomerID

order by d.ProductMainID

option (hash join)

/*

9701ms Duration

*/

select p.ProductMainID as PIDThis forces the #Custs and #OrdHeader to JOIN first, then that result is JOINed to #OrdDetail, and then that result is finally JOINed to #Prods… just like the query plan that was able to use the multi-column stats. And it runs just as fast… 2.9 seconds.

,p.Name

,d.OrderQty

,h.SalesOrderNumber

,h.OrderDate

,c.TerritoryID

from #Prods p

inner hash join (#OrdDetail d

inner hash join (#OrdHeader h

inner hash join #Custs c

on h.CustomerID=c.CustomerID)

on d.SalesOrderID=h.SalesOrderID)

on p.ProductMainID=d.ProductMainID

and p.ProductSubID=d.ProductSubID

and p.ProductSubSubID=d.ProductSubSubID

order by d.ProductMainID

However, that code is really difficult to read and figure out what’s going on. (It’s kinda cool, though, that we have the flexibility to write it that way, dontcha think?)

So we’ll have to settle for the 9.7 second query.

But thank goodness we can bring about these changes in behavior with the use of hints. They can certainly be a godsend when you don’t have any other options available to you.

Update Dec09,2010: Paul White wrote an excellent must-read follow-up to this article, giving some wonderful additional insights. If you’re not a regular reader of his blog, I must insist that you subscribe to it immediately. It’s a gold mine of terrific material.

Excellent post! I worked on a kind-of similar project a few years back so understand your frustration.

ReplyDeleteWow, great post! A great example for the legitimate use of query hints!

ReplyDeleteI just checked your blog and I am cursing myself that why I didn't find it before on internet.

ReplyDeleteExcellent blog and of course great post

@David and @Anonymous:

ReplyDeleteThanks for the great feedback... I'm glad you enjoyed it.

@Roger:

Thanks! It was nice meeting you at PASS, though that other guy with you... Rob Something-Or-Other... was a little strange. I see that you started a blog recently... I'm adding it right now to my reader... Thanks again!

Nice dude. Thanks for sharing

ReplyDeleteLee Everest

www.texastoo.com

Great example, I learnt alot.

ReplyDeleteThomas

TheSmilingDBA

IntelliMindz is the best IT Training in Bangalore with placement, offering 200 and more software courses with 100% Placement Assistance.

ReplyDeletePython Course in Bangalore

React Course In Bangalore

Automation Training In Bangalore

Blue Prism Courseourse In Bangalore

RPA Course In Bangalore

UI Path Training In Bangalore

Clinical SAS Training In Bangalore

Oracle DBA Training In Bangalore

IOS Training In Bangalore

<a href="https://intellimindz.com/tally-course-in-bangalore/>Tally Course In Bangalore</a>

Estoy agradecido por el artículo. Tengo muchas ganas de leer más. Fantástico.

ReplyDelete

ReplyDeleteIt is always so interesting to visit your site.

ReplyDeleteThe blog has accurate information I like the way you have delivered the main point related

ReplyDeleteLoving the information on this internet site, you have done great job on the blog posts.